Relevant Elephants: Fixing Canvas Commons Search

| This idea has been developed and deployed to Canvas |

I'm not really sure how to write this as a feature idea because, as far as I am concerned, it is a bug that needs to be fixed. But my dialogue with the Helpdesk went nowhere (Case #03332722), so I am submitting it as a feature request per their advice. I am not proposing how to fix this problem; I am just going to document the problem. People who actually know something about repository search would need to be the ones to propose the best set of search features; I am not an expert in repository search and how to fix a defective search algorithm. But as a user, I can declare that this is a serious problem.

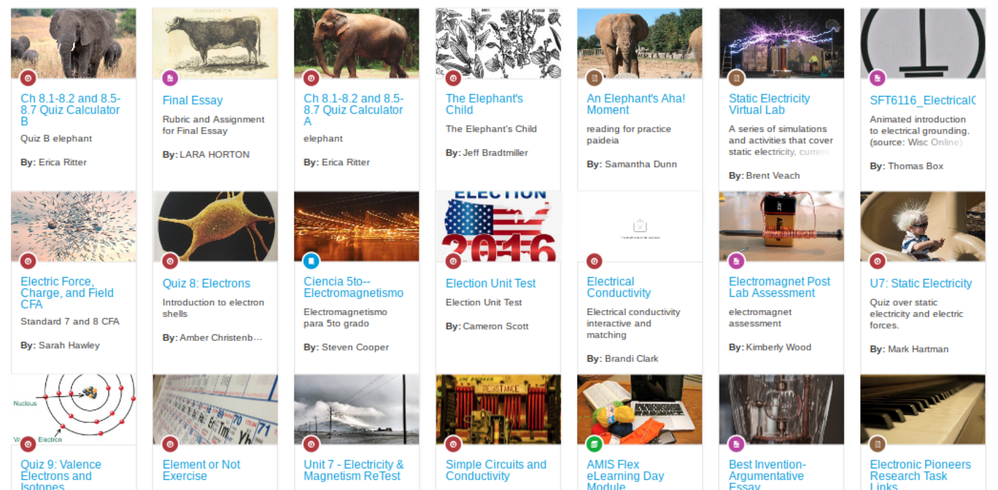

If you search Canvas Commons for elephants, you get 1084 results. Here are the "most relevant" results:

https://lor.instructure.com/search?sortBy=relevance&q=elephant

There are some elephants, which is good... but a lot of other things that start with ele- ... which is not good. Apparently there is some component in the search algorithm which returns anything (ANYTHING) that matches the first three characters of the search string.

Election Unit Test.

Static Electricity Virtual Lab.

And so on. And so on. Over 1000 false positives. ele-NOT-elephants.
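To make the suspicion concrete, here is a minimal Python sketch of what a three-character prefix match would do to this search. It is a guess at the behavior based on the results above, not the actual Commons code. The function names `prefix_match` and `whole_word_match` are made up for illustration, and the titles are ones that show up in the results described in this post.

```python
# Hypothetical sketch: NOT the actual Canvas Commons implementation.
# It only illustrates how matching on the first three characters of the
# query would produce the kind of false positives described above.

TITLES = [
    "Shooting an Elephant",
    "Election Unit Test",
    "Static Electricity Virtual Lab",
    "Valence Electrons and Isotopes",
]


def prefix_match(query, titles, prefix_len=3):
    """Return titles with any word starting with the query's first prefix_len letters."""
    prefix = query.lower()[:prefix_len]
    return [
        title
        for title in titles
        if any(word.lower().startswith(prefix) for word in title.split())
    ]


def whole_word_match(query, titles):
    """Return titles that contain the query as a complete word."""
    wanted = query.lower()
    return [
        title
        for title in titles
        if wanted in (word.lower() for word in title.split())
    ]


prefix_results = prefix_match("elephant", TITLES)
exact_results = whole_word_match("elephant", TITLES)

print(len(prefix_results), "titles match the prefix 'ele'")
print(len(exact_results), "title actually contains the word 'elephant'")
```

In this toy list, every title matches the prefix, but only one of them has an elephant in it.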

As near as I can tell, there might be a dozen elephants in Canvas Commons. I've found six for sure; there could be more... it's impossible to find them. Impossible because of the way that search works (or doesn't work). The vast -- VAST -- majority of results are for electricity, elections, elearning, elements, elementary education, electrons, and anything else that starts with ele.

You might hope that all the elephants are there at the start of the "most relevant" search results... but you would be wrong. There are 5 elephants up at the top, but then "Static Electricity Virtual Lab" and "Valence Electrons and Isotopes" etc. etc. are considered more relevant than Orwell's essay "Shooting an Elephant" (there's a quiz for that). I have yet to figure out why Static Electricity Virtual Lab is considered a more relevant search result for "elephant" than materials for George Orwell's Elephant essay which actually involves an elephant.

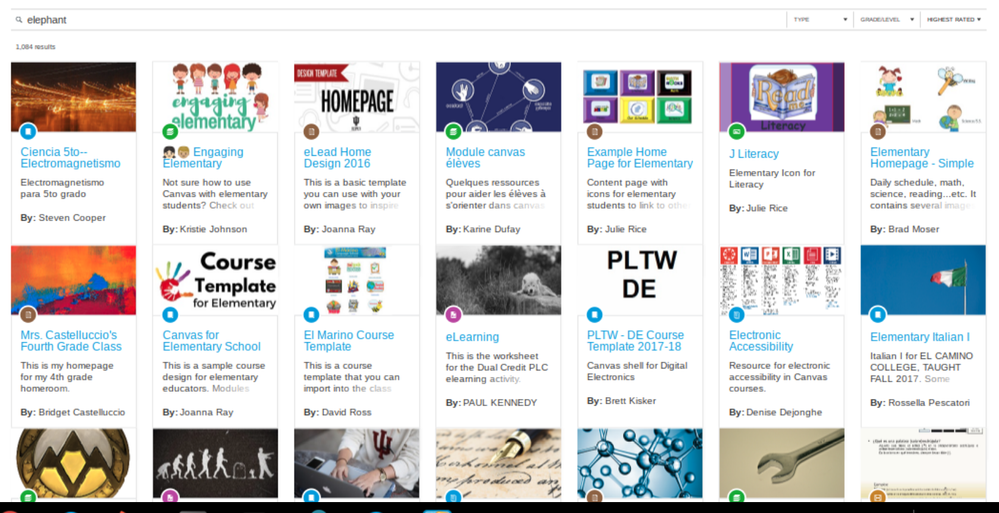

I found out about Orwell's elephant this way: when I sort by "Highest Rated," the top-rated elephant is Orwell's elephant. Lots of other highly rated items that have nothing to do with elephants come first, though, which is why you cannot see Orwell's elephant in my screenshot; it sits below everything shown there. But if you scroll on down, you will find Orwell's elephant essay. Eventually.

I found it using Control-F in my browser.

Here is the search URL:

https://lor.instructure.com/search?sortBy=rating&q=elephant

Switch the view to "Latest" and the elephants are missing here too. Really missing. Well, you'll get to them eventually, I guess, if you keep loading more and more... and more and more... and more. But no one is going to scroll and load and scroll and load to find the elephants, right?

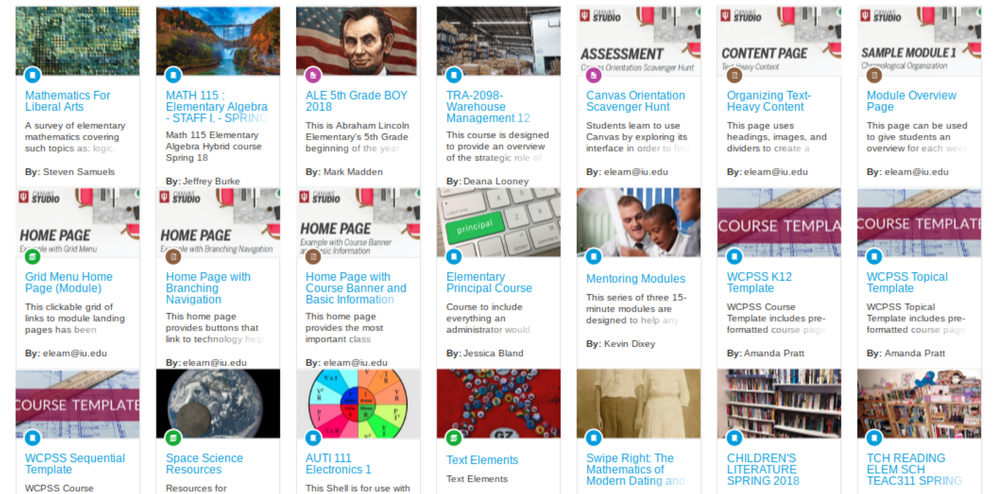

Here's the search term: elephant. But the search results are for ele-, like elementary mathematics, elementary algebra, "Abraham Lincoln Elementary's 5th Grade beginning of the year prompt," "the elements involved in warehouse management," and so on.

https://lor.instructure.com/search?sortBy=date&q=elephant

Hoping it would help, I tried putting quotation marks around the word "elephant." It did not.

The Helpdesk tells me that this is all on purpose in order to help people with spelling errors:

"That is how our search engine was set up. To search as specific as it can get and then to gradually filter in less specific content. This is done so that if a word is misspelled the search is still able to locate it."

If I type "elphant," then Google search shows me results for "elephant." That sounds good. It corrected my typo. But Canvas Commons gives me no elephants if I type "elphant." Instead it gives me two things: an item submitted by someone named Elpidio, and something called "Tech PD and Educational Technology Standards" which involves the acronym ELP. So much for helping people with spelling errors.

Electricity, elections, elements, elearning: these do not sound good. Those results are obstructing the search; they are not helping. There is nothing "gradual" about the filtering. Static electricity shows up as more relevant than George Orwell's elephant. Some kind of three-character search string is driving the algorithm to the exclusion of actual elephant matches.

If you assume that someone who typed ELEPHANT really meant to type ELECTRICITY or perhaps ELEARNING, well, that is worse than any autocorrect I have ever seen. And I have seen some really bad autocorrect.
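For contrast, here is a small Python sketch of what typo tolerance looks like when it compares whole words instead of the first three characters. It uses `difflib.get_close_matches` from Python's standard library purely as an illustration; I am not claiming this is what Commons uses or should use. The word list and the 0.8 cutoff are assumptions chosen for the example.

```python
# Illustration only: whole-word similarity as a stand-in for typo tolerance.
# The vocabulary and the 0.8 cutoff are assumptions made for this example.
from difflib import get_close_matches

VOCABULARY = ["elephant", "electricity", "election", "elearning", "elpidio"]

# A misspelled query should still find the word the user meant.
typo_matches = get_close_matches("elphant", VOCABULARY, n=3, cutoff=0.8)

# A correctly spelled query should not drift to words that merely share "ele".
exact_matches = get_close_matches("elephant", VOCABULARY, n=3, cutoff=0.8)

print(typo_matches)
print(exact_matches)
```

With this kind of comparison, "elphant" lands on "elephant," and neither query pulls in electricity, elections, or elearning, because sharing three opening letters is not enough to count as similar.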

This happens over and over again; it affects every search.

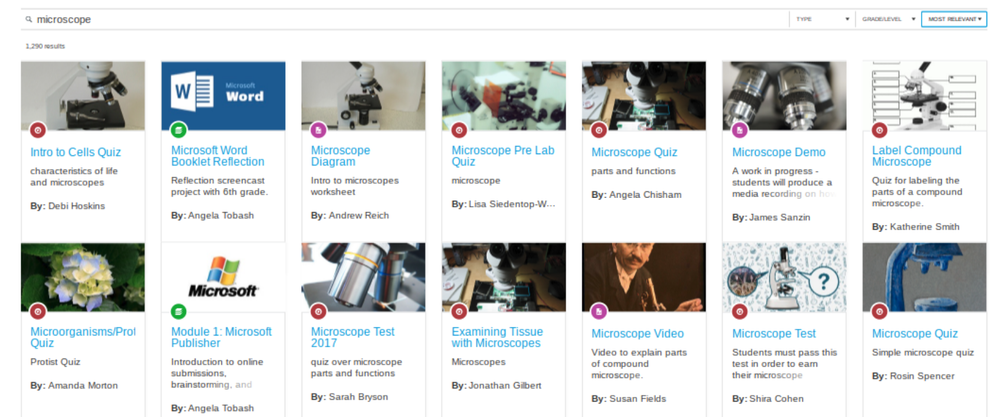

Want to search for microscopes? Get ready for a lot of Microsoft. These are supposedly the most relevant microscope search results, but the second item is Microsoft... even though, from what I can tell, it doesn't have anything to do with microscopes at all.

https://lor.instructure.com/search?sortBy=relevance&q=microscope

Still, we're doing better than with the elephants here. There are a lot of microscopes in addition to Microsoft:

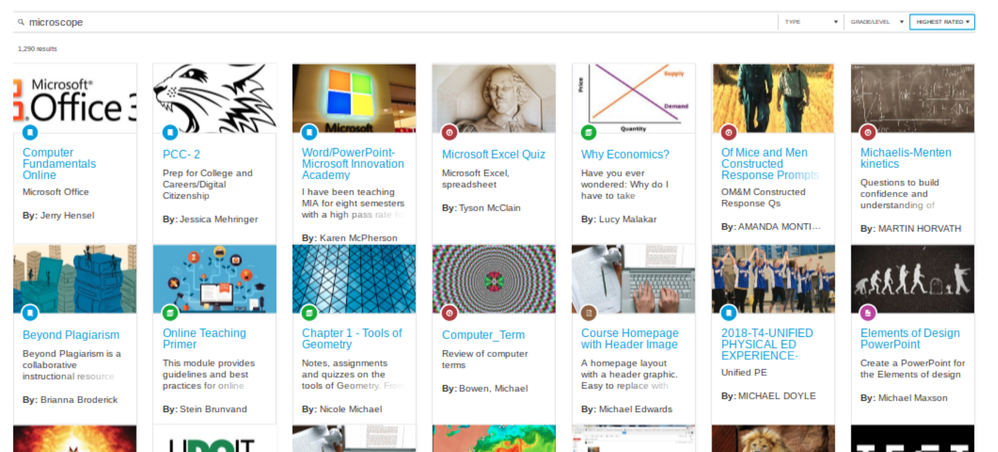

But look what happens if you want highest-rated microscopes. See the screenshot; there are no microscopes. It's Microsoft Microsoft Microsoft. But hey, there is also Of Mice and Men!

https://lor.instructure.com/search?sortBy=rating&q=microscope

So, the search algorithm assumes that, while I typed "microscope" as my search term, I might really have meant to type "Of Mice and Men." Or Microsoft. Or the name Michael (a lot of content contributors are named Michael) (or Michelle).

I could go on. But I hope everybody gets the idea. If this is really a feature of Canvas Commons search and not a bug (???), I hope this three-character search string "feature" can be replaced with a better set of search features.

Although I still would call this three-character approach to search a bug, not a feature. Which is to say: I hope we don't have to wait a couple years for the (slow and uncertain) feature request process to warrant its reexamination.

| Comments from Instructure |

For more information, please read through the Canvas Release Notes (2018-10-27): https://community.canvaslms.com/docs/DOC-15588-canvas-release-notes-2018-10-27

Added to Theme: Completed Ideas that pre-date the Ideas and Themes structure (Theme Status: Delivered)