The Instructure Community will enter a read-only state on November 22, 2025 as we prepare to migrate to our new Community platform in early December. Read our blog post for more info about this change.

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

[ARCHIVED] Getting SQS message to SQL Database

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-14-2018

08:40 AM

We are beginning to look at using #canvas live events and am wondering if there is anyone out there currently using them and could provide us with some best practices of how to use the events and getting the event message data out of the Amazon SQS and into a SQL database table where you can actually use the data from the message to produce reports.

Solved! Go to Solution.

1 Solution

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-14-2018

12:56 PM

Hi @bneporadny ,

TLDR; can you tell we're excited about this! :smileygrin:

I have been working on this solution for about 6 months now. I have designed it to be shared with the Canvas Community and it has gone through several iterations. I recently got over a few hurdles after working with Canvas on some details and then they shifted some resources to improving Live Events, and if you look at the Roadmap are working on releasing some of those improvements over the next few months. Some of those improvements are to handle an increased volume of schools deciding to use Live Events and how they can consume them (SQS or HTTPS), subscribe to them (in the UI) and changing which to use at the UI level (Caliper vs Canvas Raw). Currently Canvas has to have an engineer set it up for each instance. Because of these shifts and improvements, I told them I'd hold off on releasing the code to prevent an influx of requests before they had everything in place.

With that said... I was planning on a more formal release, blog post and explanation of the code early next year, but would be happy to share what I've been working on and why. So...

I was listening to Huddie Ledbetter aka Leadbelly's blues music one morning when I decided that was a fantastic name for my Live Events Daemon. LEDbelly, as I've come to call it, is a lightweight daemon that runs on a system (currently my Mac Mini while I build our new task server), connects to Amazon SQS, consumes messages for either Canvas Raw or Caliper format, and can push them into SQL.

Because I've used numerous community contributions over the years to help run our processes, including the many contributions of @James ... and when I was working on this and found few resources and mentions of Live Events here, I was inspired to write the code with the intention of making it available to anyone.

Some of my choices around Canvas Community were asking what databases are used so that I could support them. I can currently push events into MSSQL, MySQL, PostgreSQL and would like to support Oracle, but am waiting on my dev environment to be setup and anyone (using Oracle) in the community to answer a few questions.

My intention in making this available:

There are better ways to handle this data... (Splunk, AWS)

There are schools with money who can build nice data lakes and process this data on AWS...

we don't have that funding or the skills, yet. many of us don't, this is for us!

Not everyone is a data analyst, programmer, or understands Big Data

For the rest of us, SQL is the only way to handle data... unstructured or not

If we all write our own programs we're all reinventing the wheel instead of improving it

I'm hopeful there is interest from the community to collaborate and build something we can all use...

... if not, it will be available as is (as I add to it) for your use or as a starter kit. I will be contributing this to our Github account early 2019 when Canvas can handle the increased volume and turning on LE for Canvas is easier.

Since you asked about best practices and how to use it, I'll share some details.

I will definitely be writing another article on this soon but would like to give you some features now.

Ledbelly

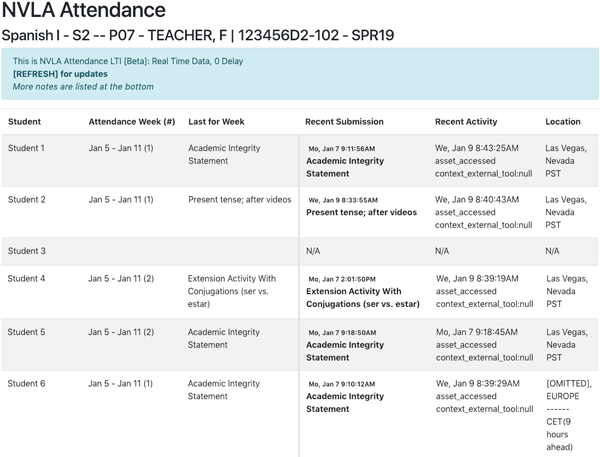

When not running in the background I can watch events happening in real time. The date is old because I tried to post a 60 second video last month but Twitter hated the format.

Multi-threaded daemon for consuming SQS messages quickly... Multi threading is necessary because sometimes you'll see one or two events a second, or a minute... during peak times many many more. This is especially necessary since multiple events happen at the same time, I found that importing 1 message at a time in SQL would quickly create a backup and latency as I couldn't insert them fast enough.

Here's a screen grab from our internal ops dashboard smithb_ccsd developed to keep an eye on all our in-out processes.

Connection pooling, just like multi threading, the database connection needs to be able to handle increases and decreases in the amount of transactions happening in the database.

The rest of the features are pretty basic. I chose the Canvas Raw Format vs the Caliper format because its compatible with Canvas Data and is easier to consume, and it has more events. Both can be consumed at the same time. The code is currently set up to import the Caliper Format into SQL with underscore notation and dynamically generate tables in a test environment... I do this to track changes and help answer questions that pop up. Thoughts on that here... https://community.canvaslms.com/docs/DOC-15741-event-type-by-format#comment-132790

For the Canvas Raw format, I have mapped the event fields, setup data types and have generated DDLs for the 3 databases I mentioned above and written code to dynamically generate those DDLs when I needed an update or to create documentation. Live Events changes quite often, just this week they added client_ip to many of the events. I've been trying to help update the documentation, by creating Pull Request #1379 with an update to the API doc...

canvas-lms/live_events.md at live-events-documentation · robert-carroll/canvas-lms · GitHub

I've spent a decent amount of time trying to track the changes in the Canvas Github commits to keep the code up to date. On the off chance that I miss something in the code here are the exception handlers...

New Events... or unidentified events the code wasn't expecting. If a new event is sent to the SQS stream and the code isn't ready for it we'd either throw an error or (because I'm using a Case statement) would completely ignore it. When this happens I instead push that event to a secondary SQS queue (mine is called -moo, because cows have multiple bellies and I'm a nerd). This allows me to catch the event, evaluate it, and update the code. I can also log the payload to a local file.

Field Count... sometimes new fields appear in the event the code wasn't expecting, and I use this method (which is written to an error file) to evaluate the field, assess its data type and update the schema and the code. Otherwise the code would quietly continue working and never add that data to the database.

---

One of the things I've tried to do is prevent myself from making assumptions for the community where we'd probably benefit from collaborating on best practices. Currently, I push all events into their own table, which makes it easier to join data or update data with Canvas Data... but just yesterday I added a new method which grabs common fields in all events and pushes them to a live_stream table, which I use for user activity. This allows me to create faster views when trying to associate things in Canvas data for Users vs Records... joins are slow, so I currently feel like the speed benefit is worth the duplication. Also since Canvas Data will pick up most of this information within 48 hours... we probably don't need to store it for too long. But I haven't found how long I should hold on to it yet...

If you are already using Canvas Data, a lot of the events can be appended to reports to giving you the most updated submission timestamp or activity for a user, this helps extend Canvas Data since your last dump/import.

One thing we've been working on is displaying current number of users who are online and eliminating noise from the Requests Table which I... The Problem with the Requests Table. I can easily eliminate Amazon Data Centers (backend Canvas tasks) from current user activity. I have not quite figured out yet how to eliminate all noise from the table... but not entirely sure now if that's even necessary. I had originally asked for an IP address in the Login Event... but with IP in almost all events... the Requests Table starts becoming more useful for troubleshooting DevOps and random questions CD won't answer like this and this, not user activity...

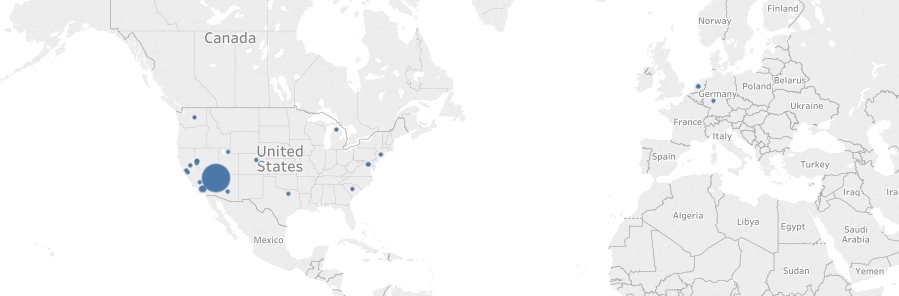

User locations in the last 2 hours... (I append geo location data to this and view it in Tableau)

Last but not least, with Canvas Data... since we have over 350 schools. I can use the context_id from the event, get the course_id then the account_id and list the current users in Canvas at each School.

That's just who and how many data... Live Events is extremely useful in allowed applications and processes to act in real time instead of off old Canvas Data or static Account Reports.

If you already have Live Events, we can possibly start collaborating before I push the code publically.

Please feel free to ask any questions or DM me.

22 Replies

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-14-2018

12:56 PM

Hi @bneporadny ,

TLDR; can you tell we're excited about this! :smileygrin:

I have been working on this solution for about 6 months now. I have designed it to be shared with the Canvas Community and it has gone through several iterations. I recently got over a few hurdles after working with Canvas on some details and then they shifted some resources to improving Live Events, and if you look at the Roadmap are working on releasing some of those improvements over the next few months. Some of those improvements are to handle an increased volume of schools deciding to use Live Events and how they can consume them (SQS or HTTPS), subscribe to them (in the UI) and changing which to use at the UI level (Caliper vs Canvas Raw). Currently Canvas has to have an engineer set it up for each instance. Because of these shifts and improvements, I told them I'd hold off on releasing the code to prevent an influx of requests before they had everything in place.

With that said... I was planning on a more formal release, blog post and explanation of the code early next year, but would be happy to share what I've been working on and why. So...

I was listening to Huddie Ledbetter aka Leadbelly's blues music one morning when I decided that was a fantastic name for my Live Events Daemon. LEDbelly, as I've come to call it, is a lightweight daemon that runs on a system (currently my Mac Mini while I build our new task server), connects to Amazon SQS, consumes messages for either Canvas Raw or Caliper format, and can push them into SQL.

Because I've used numerous community contributions over the years to help run our processes, including the many contributions of @James ... and when I was working on this and found few resources and mentions of Live Events here, I was inspired to write the code with the intention of making it available to anyone.

Some of my choices around Canvas Community were asking what databases are used so that I could support them. I can currently push events into MSSQL, MySQL, PostgreSQL and would like to support Oracle, but am waiting on my dev environment to be setup and anyone (using Oracle) in the community to answer a few questions.

My intention in making this available:

There are better ways to handle this data... (Splunk, AWS)

There are schools with money who can build nice data lakes and process this data on AWS...

we don't have that funding or the skills, yet. many of us don't, this is for us!

Not everyone is a data analyst, programmer, or understands Big Data

For the rest of us, SQL is the only way to handle data... unstructured or not

If we all write our own programs we're all reinventing the wheel instead of improving it

I'm hopeful there is interest from the community to collaborate and build something we can all use...

... if not, it will be available as is (as I add to it) for your use or as a starter kit. I will be contributing this to our Github account early 2019 when Canvas can handle the increased volume and turning on LE for Canvas is easier.

Since you asked about best practices and how to use it, I'll share some details.

I will definitely be writing another article on this soon but would like to give you some features now.

Ledbelly

When not running in the background I can watch events happening in real time. The date is old because I tried to post a 60 second video last month but Twitter hated the format.

Multi-threaded daemon for consuming SQS messages quickly... Multi threading is necessary because sometimes you'll see one or two events a second, or a minute... during peak times many many more. This is especially necessary since multiple events happen at the same time, I found that importing 1 message at a time in SQL would quickly create a backup and latency as I couldn't insert them fast enough.

Here's a screen grab from our internal ops dashboard smithb_ccsd developed to keep an eye on all our in-out processes.

Connection pooling, just like multi threading, the database connection needs to be able to handle increases and decreases in the amount of transactions happening in the database.

The rest of the features are pretty basic. I chose the Canvas Raw Format vs the Caliper format because its compatible with Canvas Data and is easier to consume, and it has more events. Both can be consumed at the same time. The code is currently set up to import the Caliper Format into SQL with underscore notation and dynamically generate tables in a test environment... I do this to track changes and help answer questions that pop up. Thoughts on that here... https://community.canvaslms.com/docs/DOC-15741-event-type-by-format#comment-132790

For the Canvas Raw format, I have mapped the event fields, setup data types and have generated DDLs for the 3 databases I mentioned above and written code to dynamically generate those DDLs when I needed an update or to create documentation. Live Events changes quite often, just this week they added client_ip to many of the events. I've been trying to help update the documentation, by creating Pull Request #1379 with an update to the API doc...

canvas-lms/live_events.md at live-events-documentation · robert-carroll/canvas-lms · GitHub

I've spent a decent amount of time trying to track the changes in the Canvas Github commits to keep the code up to date. On the off chance that I miss something in the code here are the exception handlers...

New Events... or unidentified events the code wasn't expecting. If a new event is sent to the SQS stream and the code isn't ready for it we'd either throw an error or (because I'm using a Case statement) would completely ignore it. When this happens I instead push that event to a secondary SQS queue (mine is called -moo, because cows have multiple bellies and I'm a nerd). This allows me to catch the event, evaluate it, and update the code. I can also log the payload to a local file.

Field Count... sometimes new fields appear in the event the code wasn't expecting, and I use this method (which is written to an error file) to evaluate the field, assess its data type and update the schema and the code. Otherwise the code would quietly continue working and never add that data to the database.

---

One of the things I've tried to do is prevent myself from making assumptions for the community where we'd probably benefit from collaborating on best practices. Currently, I push all events into their own table, which makes it easier to join data or update data with Canvas Data... but just yesterday I added a new method which grabs common fields in all events and pushes them to a live_stream table, which I use for user activity. This allows me to create faster views when trying to associate things in Canvas data for Users vs Records... joins are slow, so I currently feel like the speed benefit is worth the duplication. Also since Canvas Data will pick up most of this information within 48 hours... we probably don't need to store it for too long. But I haven't found how long I should hold on to it yet...

If you are already using Canvas Data, a lot of the events can be appended to reports to giving you the most updated submission timestamp or activity for a user, this helps extend Canvas Data since your last dump/import.

One thing we've been working on is displaying current number of users who are online and eliminating noise from the Requests Table which I... The Problem with the Requests Table. I can easily eliminate Amazon Data Centers (backend Canvas tasks) from current user activity. I have not quite figured out yet how to eliminate all noise from the table... but not entirely sure now if that's even necessary. I had originally asked for an IP address in the Login Event... but with IP in almost all events... the Requests Table starts becoming more useful for troubleshooting DevOps and random questions CD won't answer like this and this, not user activity...

User locations in the last 2 hours... (I append geo location data to this and view it in Tableau)

Last but not least, with Canvas Data... since we have over 350 schools. I can use the context_id from the event, get the course_id then the account_id and list the current users in Canvas at each School.

That's just who and how many data... Live Events is extremely useful in allowed applications and processes to act in real time instead of off old Canvas Data or static Account Reports.

If you already have Live Events, we can possibly start collaborating before I push the code publically.

Please feel free to ask any questions or DM me.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-14-2018

03:32 PM

carroll-ccsd- your excitement doesn't come through in the slightest - just kidding. I'm glad it's coming together and wish I could have helped more in the development (or at least the testing), I just haven't had the time and we're not at a place where we can use it yet.

The machine they got me for the my Canvas Data server kept locking up and they just put a bigger power supply in it today, so hopefully that will make the machine stable. On the bright side, although I haven't been able to do much with Canvas Data on it yet, I've been using it to scan 1.5 months of emails trying to cut down on the amount of spam we're getting.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-17-2018

11:14 AM

Canvas Data definitely requires a beefier box. I'm lucky that ours is a VM and I can just ask for more resources when necessary. Spam now?! You wear so many hats!

Canvas Data definitely requires a beefier box. I'm lucky that ours is a VM and I can just ask for more resources when necessary. Spam now?! You wear so many hats!

@bneporadny , DM'd me Friday and we carried on a little about the difficulties of processing events and I shared some examples. He's currently getting the Caliper format, which I keep neglecting now that I have Canvas Raw. Anyway, I was working on the house this weekend (finally finishing the baby's room with casings, baseboard and crown molding) and rethinking this thread and decided I hate really_long_underscore_notation. Since, the easiest way for me to get Caliper into SQL so far was flattening the JSON and importing the key as the column, which left me with the following mess for a table structure. This morning, I've modified it a little and added _squish method.

CREATE TABLE dbo.ims_asset_accessed (

_context varchar(255) NULL,

id varchar(255) NULL,

[type] varchar(255) NULL,

actor_id varchar(255) NULL,

actor_type varchar(255) NULL,

actor_extensions_com_instructure_canvas_user_login varchar(255) NULL,

actor_extensions_com_instructure_canvas_root_account_id varchar(255) NULL,

actor_extensions_com_instructure_canvas_root_account_lti_guid varchar(255) NULL,

actor_extensions_com_instructure_canvas_root_account_uuid varchar(255) NULL,

actor_extensions_com_instructure_canvas_entity_id varchar(255) NULL,

[action] varchar(255) NULL,

object_id varchar(255) NULL,

object_type varchar(255) NULL,

object_name varchar(255) NULL,

object_extensions_com_instructure_canvas_asset_type varchar(255) NULL,

object_extensions_com_instructure_canvas_entity_id varchar(255) NULL,

eventtime varchar(255) NULL,

edapp_id varchar(255) NULL,

edapp_type varchar(255) NULL,

group_id varchar(255) NULL,

group_type varchar(255) NULL,

group_extensions_com_instructure_canvas_context_type varchar(255) NULL,

group_extensions_com_instructure_canvas_entity_id varchar(255) NULL,

membership_id varchar(255) NULL,

membership_type varchar(255) NULL,

membership_member_id varchar(255) NULL,

membership_member_type varchar(255) NULL,

membership_organization_id varchar(255) NULL,

membership_organization_type varchar(255) NULL,

membership_roles varchar(255) NULL,

session_id varchar(255) NULL,

session_type varchar(255) NULL,

extensions_com_instructure_canvas_hostname varchar(255) NULL,

extensions_com_instructure_canvas_request_id varchar(255) NULL,

extensions_com_instructure_canvas_user_agent varchar(255) NULL,

extensions_com_instructure_canvas_version varchar(255) NULL,

object_extensions_com_instructure_canvas_asset_subtype varchar(255) NULL,

actor_extensions_com_instructure_canvas_real_user_id varchar(255) NULL

);I think it needs a little improvement on structure. Sharing in case any Rubyist's can improve it.

def _flatten(hash, recursive_key = '')

hash.each_with_object({}) do |(k, v), ret|

key = recursive_key + k.to_s

key = key.gsub(/[^a-zA-Z]/, '_')

if v.is_a? Hash

ret.merge! _flatten(v, key + '_')

else

ret[key] = v

end

end

end

def _squish(hash)

hash = _flatten(hash)

hash.each_with_object({}) do |(k, v), ret|

k = k.gsub(/extensions|com|instructure|canvas/, '').gsub(/_+/, '_').gsub(/^_/, '').downcase

ret[k] = v

end

endWhich resulted in a much nicer DDL. Now, I can start writing code that isn't dynamic for Caliper, which would allow me to pass those to a production environment... and Ledbelly would be able to handle either or both formats simultaneously. Sometimes just saying something out loud makes you realize there's a better way.

CREATE TABLE dbo.ims_asset_accessed (

context varchar(255) NULL,

id varchar(255) NULL,

[type] varchar(255) NULL,

actor_id varchar(255) NULL,

actor_type varchar(255) NULL,

actor_user_login varchar(255) NULL,

actor_root_account_id varchar(255) NULL,

actor_root_account_lti_guid varchar(255) NULL,

actor_root_account_uuid varchar(255) NULL,

actor_entity_id varchar(255) NULL,

actor_real_user_id varchar(255) NULL,

[action] varchar(255) NULL,

object_id varchar(255) NULL,

object_type varchar(255) NULL,

object_name varchar(255) NULL,

object_asset_type varchar(255) NULL,

object_entity_id varchar(255) NULL,

eventtime varchar(255) NULL,

edapp_id varchar(255) NULL,

edapp_type varchar(255) NULL,

group_id varchar(255) NULL,

group_type varchar(255) NULL,

group_context_type varchar(255) NULL,

group_entity_id varchar(255) NULL,

membership_id varchar(255) NULL,

membership_type varchar(255) NULL,

membership_member_id varchar(255) NULL,

membership_member_type varchar(255) NULL,

membership_organization_id varchar(255) NULL,

membership_organization_type varchar(255) NULL,

membership_roles varchar(255) NULL,

session_id varchar(255) NULL,

session_type varchar(255) NULL,

hostname varchar(255) NULL,

request_id varchar(255) NULL,

user_agent varchar(255) NULL,

version varchar(255) NULL,

object_asset_subtype varchar(255) NULL

);- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-20-2018

04:11 PM

For Caliper Format:

Today, using the method above to flatten and squish the column names I was able to generate the code that explicitly sets the fields and data types for each event and removing redundant (shared) fields. I think I consumed about 250K caliper events and finally stopped getting notified that new tables and columns were being generated.

Tomorrow I'll write the DDL scripts for Caliper.

As I've stated, I intend to share the entire source at an appropriate time. But @bneporadny asked about 'best practices' too... so I'm sharing to for discourse.

Next I will be defining the datatypes as I see them.

Caliper format shares and reuses the same naming for almost all event fields. This code format (which is the same for my Raw Format code) would allow us or an institution to get the data as is, while also having the option to change their schema and the fields below to be more recognizable. We'll also have to establish whether fields like object_id are cleaned up in the code or left as is...

urn:instructure:canvas:course:10000000123

urn:instructure:canvas:attachment:1000000123

# handle ims caliper

shared = {

action: data['action'].nil? ? nil : data['action'].to_s,

actor_entity_id: data['actor_entity_id'].nil? ? nil : data['actor_entity_id'].to_s,

actor_id: data['actor_id'].nil? ? nil : data['actor_id'].to_s,

actor_real_user_id: data['actor_real_user_id'].nil? ? nil : data['actor_real_user_id'].to_s,

actor_root_account_id: data['actor_root_account_id'].nil? ? nil : data['actor_root_account_id'].to_s,

actor_root_account_lti_guid: data['actor_root_account_lti_guid'].nil? ? nil : data['actor_root_account_lti_guid'].to_s,

actor_root_account_uuid: data['actor_root_account_uuid'].nil? ? nil : data['actor_root_account_uuid'].to_s,

actor_type: data['actor_type'].nil? ? nil : data['actor_type'].to_s,

actor_user_login: data['actor_user_login'].nil? ? nil : data['actor_user_login'].to_s,

edapp_id: data['edapp_id'].nil? ? nil : data['edapp_id'].to_s,

edapp_type: data['edapp_type'].nil? ? nil : data['edapp_type'].to_s,

eventtime: data['eventtime'].nil? ? nil : data['eventtime'].to_s,

group_context_type: data['group_context_type'].nil? ? nil : data['group_context_type'].to_s,

group_entity_id: data['group_entity_id'].nil? ? nil : data['group_entity_id'].to_s,

group_id: data['group_id'].nil? ? nil : data['group_id'].to_s,

group_type: data['group_type'].nil? ? nil : data['group_type'].to_s,

hostname: data['hostname'].nil? ? nil : data['hostname'].to_s,

id: data['id'].nil? ? nil : data['id'].to_s,

job_id: data['job_id'].nil? ? nil : data['job_id'].to_s,

job_tag: data['job_tag'].nil? ? nil : data['job_tag'].to_s,

membership_id: data['membership_id'].nil? ? nil : data['membership_id'].to_s,

membership_member_id: data['membership_member_id'].nil? ? nil : data['membership_member_id'].to_s,

membership_member_type: data['membership_member_type'].nil? ? nil : data['membership_member_type'].to_s,

membership_organization_id: data['membership_organization_id'].nil? ? nil : data['membership_organization_id'].to_s,

membership_organization_type: data['membership_organization_type'].nil? ? nil : data['membership_organization_type'].to_s,

membership_roles: data['membership_roles'].nil? ? nil : data['membership_roles'].to_s,

membership_type: data['membership_type'].nil? ? nil : data['membership_type'].to_s,

object_id: data['object_id'].nil? ? nil : data['object_id'].to_s,

object_entity_id: data['object_entity_id'].nil? ? nil : data['object_entity_id'].to_s,

object_name: data['object_name'].nil? ? nil : data['object_name'].to_s,

object_type: data['object_type'].nil? ? nil : data['object_type'].to_s,

request_id: data['request_id'].nil? ? nil : data['request_id'].to_s,

session_id: data['session_id'].nil? ? nil : data['session_id'].to_s,

session_type: data['session_type'].nil? ? nil : data['session_type'].to_s,

type: data['type'].nil? ? nil : data['type'].to_s,

user_agent: data['user_agent'].nil? ? nil : data['user_agent'].to_s,

version: data['version'].nil? ? nil : data['version'].to_s,

}.compact

case event_name

when 'asset_accessed'

specific = {

object_asset_type: data['object_asset_type'].nil? ? nil : data['object_asset_type'].to_s,

object_asset_subtype: data['object_asset_subtype'].nil? ? nil : data['object_asset_subtype'].to_s,

}

when 'assignment_created'

specific = {

object_datecreated: data['object_datecreated'].nil? ? nil : data['object_datecreated'].to_s,

object_maxscore_numberstr: data['object_maxscore_numberstr'].nil? ? nil : data['object_maxscore_numberstr'].to_s,

object_description: data['object_description'].nil? ? nil : data['object_description'].to_s,

object_lock_at: data['object_lock_at'].nil? ? nil : data['object_lock_at'].to_s,

object_datetoshow: data['object_datetoshow'].nil? ? nil : data['object_datetoshow'].to_s,

object_datetosubmit: data['object_datetosubmit'].nil? ? nil : data['object_datetosubmit'].to_s,

}- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-21-2018

09:38 AM

Would really love any thoughts here.

Caliper format pushes me to want to make decisions that I feel require popular vote.

There is just too much here that I'd prefer to trim and cast, but that might not be what others would do.*

Here's the Caliper submssion_created_table...

especially, all the urn:instructure:canvas:

IF OBJECT_ID('dbo.ims_submission_created', 'U') IS NOT NULL DROP TABLE dbo.ims_submission_created;

IF NOT EXISTS (SELECT * FROM sysobjects WHERE name='ims_submission_created' AND xtype='U')

CREATE TABLE dbo.ims_submission_created (

-- created

[submission_created_id] BIGINT IDENTITY(1,1) PRIMARY KEY,

[processed_at] DATETIME,

[event_time_local] DATETIME,

--

[action] VARCHAR(255), -- (9) Submitted

[actor_entity_id] VARCHAR(255), -- (15) 260000001234567

[actor_id] VARCHAR(255), -- (43) urn:instructure:canvas:user:260000001234567

[actor_root_account_id] VARCHAR(255), -- (15) 260000000000001

[actor_root_account_lti_guid] VARCHAR(255), -- (40) tXm2ckBmgwurxttsdxTIHiCQ1ZlSGTwkyWVClxY3

[actor_root_account_uuid] VARCHAR(255), -- (40) tXm2ckBmgwurxttsdxTIHiCQ1ZlSGTwkyWVClxY3

[actor_type] VARCHAR(255), -- (6) Person

[actor_user_login] VARCHAR(255), -- (4) STNICK

[context] VARCHAR(255), -- (42) http://purl.imsglobal.org/ctx/caliper/v1p1

[edapp_id] VARCHAR(255), -- (25) http://www.canvaslms.com/

[edapp_type] VARCHAR(255), -- (19) SoftwareApplication

[eventtime] VARCHAR(255), -- (24) 2018-12-21T15:18:57.000Z

[group_context_type] VARCHAR(255), -- (6) Course

[group_entity_id] VARCHAR(255), -- (15) 260000001342134

[group_id] VARCHAR(255), -- (45) urn:instructure:canvas:course:260000001342134

[group_type] VARCHAR(255), -- (14) CourseOffering

[hostname] VARCHAR(255), -- (20) ccsd.instructure.com

[id] VARCHAR(255), -- (45) urn:uuid:fffa9f91-3687-4fbc-8fb1-5a2efe38d630

[job_id] VARCHAR(255), -- (16) 1010019823628138

[job_tag] VARCHAR(255), -- (53) Services::SubmitHomeworkService::SubmitWorker#perform

[membership_id] VARCHAR(255), -- (69) urn:instructure:canvas:course:260000001342134:Learner:260000005600682

[membership_member_id] VARCHAR(255), -- (43) urn:instructure:canvas:user:260000005715137

[membership_member_type] VARCHAR(255), -- (6) Person

[membership_organization_id] VARCHAR(255), -- (45) urn:instructure:canvas:course:260000001342134

[membership_organization_type] VARCHAR(255), -- (14) CourseOffering

[membership_roles] VARCHAR(255), -- (7) Learner

[membership_type] VARCHAR(255), -- (10) Membership

[object_assignable_id] VARCHAR(255), -- (49) urn:instructure:canvas:assignment:260000007654321

[object_assignable_type] VARCHAR(255), -- (25) AssignableDigitalResource

[object_assignee_id] VARCHAR(255), -- (43) urn:instructure:canvas:user:260000001234567

[object_assignee_type] VARCHAR(255), -- (6) Person

[object_body] VARCHAR(255), -- (255) html

[object_count] VARCHAR(255), -- (1) 4

[object_datecreated] VARCHAR(255), -- (24) 2018-12-21T15:18:57.000Z

[object_entity_id] VARCHAR(255), -- (15) 260000087654321

[object_id] VARCHAR(255), -- (49) urn:instructure:canvas:submission:260000087654321

[object_submission_type] VARCHAR(255), -- (10) online_url

[object_type] VARCHAR(255), -- (7) Attempt

[object_url] VARCHAR(255), -- (28) https://youtu.be/abcdef

[request_id] VARCHAR(255), -- (36) fffd5b21-6a1f-4b75-a189-796421756ce5

[session_id] VARCHAR(255), -- (63) urn:instructure:canvas:session:ffe672636961372ad62aa7652eb6cec1

[session_type] VARCHAR(255), -- (7) Session

[type] VARCHAR(255), -- (15) AssignableEvent

[user_agent] VARCHAR(255), -- (16) OAuth gem v0.4.7

[version] VARCHAR(255), -- (5) 1.0.0

);*Fortunately, I don't use the Caliper format, so I can just leave it to be defined by the user later. :smileygrin:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-21-2018

08:57 PM

Caliper works for other things besides Canvas. Is it possible that people will be getting Caliper notices from them that would go into this table as well? If so, then you probably shouldn't shorten anything.

Beyond that, I don't have much in the way of thoughts.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-26-2018

09:43 AM

My program is specific to Canvas Live Events because of the event names. Caliper standardizes the event data and the event type, but I think the LMS still gets to determine the event_name attribute sent with the SQS message, or if it's sent at all. This is more obvious with Quizzes.Next events than events like submission_created. While the script can parse any Caliper event, the scripts triggers would probably have to be setup for the LMS sending the events. I won't be supporting that... but anyone could technically fork it for their purposes.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-08-2019

10:33 PM

Hi all - sorry to be replying late to this awesome thread! Didn't see if before the holidays...

We have worked with a client to pull Canvas live events in Caliper format from SQS into Kinesis firehose where it is flattened and moved into AWS S3, which is then loaded via AWS Glue into a table in Redshift. The table structure is similar to what @carrollrw came up with - and similar processes are also in place to feed Caliper event data from other sources into the same table. (We added a source column in the table to distinguish between them.) The interesting part has been interpreting the event data based on the event type and also correlating events coming in from both Canvas as well as the tools which are launched from Canvas (and feed Caliper events to the same table).

Sorry I don't have as thorough of a writeup as others here! I'm happy to get on a call to talk more about our experiences if that would help -

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-09-2019

11:34 AM

Hi @lfeng1 ,

That sounds like a nice and efficient setup! I recently went to AWS:ReInvent and took a couple of the Big Data workshops which was extremely useful in learning how I could move our data to more powerful tools. I'm jealous of anyone who can utilize them, because the AWS services make it fast and fun. It's the cost that keeps us off that playground.

I've worked out this solution for those who can't afford anything more than what is likely existing technical services at their institution. My hope is to show progress with this data and prove we need budgeting for services like you and AWS offer. :smileygrin:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-17-2018

01:51 PM

Hi @bneporadny ,

carroll-ccsd has definitely done a great deal of work on this. There are two different types of data you can subscribe to - caliper and canvas raw data. The canvas raw data looks almost exactly like the data already in canvas api and canvas data. I've only worked with submissions and there is a lot to look at as they come in.

Right now my database table is set up like this...I actually pull in the metadata and body into two longtext fields just so I can learn what's in it and discover where any data problems may occur that might cause it to not populate some of the fields. I figure as I discover what's in there, I can stop bringing in the body and metadata fields.

My initial goal with Canvas Data is to be able to get more frequent and almost real-time information about submissions in order to get the most accurate last_activity_date possible for financial aid Last Attendance Date and for flagging students for our Early Alert system.

Table: aws_submission

Columns:

| event_name | varchar(255) |

| event_time | varchar(255) |

| submission_id | varchar(255) |

| submission_type | varchar(255) |

| assignment_id | varchar(255) |

| submitted_at | varchar(255) |

| updated_at | varchar(255) |

| real_user_id | varchar(255) |

| user_login | varchar(255) |

| user_sis_id | varchar(255) |

| user_id_submission | varchar(255) |

| user_id_md | varchar(255) |

| lti_user_id | varchar(255) |

| context_type | varchar(255) |

| context_id | varchar(255) |

| group_id | varchar(255) |

| context_role | varchar(255) |

| attempt | varchar(255) |

| score | varchar(255) |

| grade | varchar(255) |

| hostname | varchar(255) |

| request_id | varchar(255) |

| session_id | varchar(255) |

| user_agent | longtext |

| job_id | varchar(255) |

| job_tag | varchar(255) |

| body_text | longtext |

| url | varchar(255) |

| lti_assignment_id | varchar(255) |

| metadata | longtext |

| body | longtext |

| producer | varchar(255) |

Not sure if that helps - I'm happy to walk you through the scripts that I came up with to get the stuff from AWS and pull it into my database.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-17-2018

02:48 PM

I see you've caught that, for some* events the user_id in metadata and body can be different. The pattern seems to be that the user_id in body is who the record belongs to, while the metadata user_id is the user performing the action.

* These events:

enrollment_created

enrollment_updated

grade_change

submission_created

submission_updated

user_account_association_created

user_created

user_updated

I'm also wondering if are you creating any constraints or indexes for data at this point? I'm not there yet... but thought I'd share these ideas.

First, I create 2 additional columns: processed_at and event_time_local, just before insert... this saves me from having to do really expensive timezone conversions in SQL. While the event_time doesn't currently have microsecond positions, the Roadmap states they will be adding it. I use processed_at and event_time_local to determine the latency, in the our dashboard.

Additionally, the DDL creates a sequenced primary key on insert.

-- mssql

-- live_submission_created

CREATE TABLE dbo.live_submission_created (

-- created

[submission_created_id] BIGINT IDENTITY(1,1) PRIMARY KEY,

[processed_at] DATETIME,

[event_time_local] DATETIME,

-- metadata

[client_ip] VARCHAR(39),

[context_id] BIGINT,

[context_role] VARCHAR(24),

[context_type] VARCHAR(24),

[event_name] VARCHAR(18),

[event_time] DATETIME,

[hostname] VARCHAR(64),

[job_id] BIGINT,

[job_tag] VARCHAR(100),

[producer] VARCHAR(12),

[real_user_id] BIGINT,

[request_id] VARCHAR(36),

[root_account_id] BIGINT,

[root_account_lti_guid] VARCHAR(40),

[root_account_uuid] VARCHAR(40),

[session_id] VARCHAR(32),

[user_account_id] BIGINT,

[user_agent] VARCHAR(255),

[user_login] VARCHAR(64),

[user_sis_id] VARCHAR(32),

-- body

[submission_id] BIGINT,

[assignment_id] BIGINT,

[user_id] BIGINT,

[submitted_at] DATETIME,

[lti_user_id] VARCHAR(40),

[graded_at] DATETIME,

[updated_at] DATETIME,

[score] FLOAT(53),

[grade] VARCHAR(70),

[submission_type] VARCHAR(24),

[body] NVARCHAR(MAX),

[url] VARCHAR(MAX),

[attempt] INT,

[lti_assignment_id] VARCHAR(36),

[group_id] INT,

);or mysql...

-- mysql

-- live_submission_created

CREATE TABLE live_events.live_submission_created (

-- created

`submission_created_id` BIGINT NOT NULL AUTO_INCREMENT,

`processed_at` DATETIME,

`event_time_local` DATETIME,

-- metadata

`client_ip` VARCHAR(39),

`context_id` BIGINT,

`context_role` VARCHAR(24),

`context_type` VARCHAR(24),

`event_name` VARCHAR(18),

`event_time` DATETIME,

`hostname` VARCHAR(64),

`job_id` BIGINT,

`job_tag` VARCHAR(100),

`producer` VARCHAR(12),

`real_user_id` BIGINT,

`request_id` VARCHAR(36),

`root_account_id` BIGINT,

`root_account_lti_guid` VARCHAR(40),

`root_account_uuid` VARCHAR(40),

`session_id` VARCHAR(32),

`user_account_id` BIGINT,

`user_agent` VARCHAR(255),

`user_login` VARCHAR(64),

`user_sis_id` VARCHAR(32),

-- body

`submission_id` BIGINT,

`assignment_id` BIGINT,

`user_id` BIGINT,

`submitted_at` DATETIME,

`lti_user_id` VARCHAR(40),

`graded_at` DATETIME,

`updated_at` DATETIME,

`score` FLOAT(53),

`grade` VARCHAR(70),

`submission_type` VARCHAR(24),

`body` TEXT CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci,

`url` TEXT CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci,

`attempt` INT,

`lti_assignment_id` VARCHAR(36),

`group_id` INT,

PRIMARY KEY (`submission_created_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE utf8mb4_unicode_ci;- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-19-2018

03:46 PM

Got the Oracle dev environment I needed. LEDbelly is generating an Oracle schema for Caliper and Canvas formats. :smileygrin:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-09-2019

11:27 AM

Hi folks,

The LTI uses a combination of Canvas Data and Live Events, filling in gaps where Canvas data has older records.

I'd like to organize a small group of users who are interested in collaborating and polishing the project (LEDBelly, not the LTI) for community use. We could test the code across organizations, database environments and figure out some common features and fix some bugs when we find them. If you are interested please DM me. Once I get a short list we'll work out a time for webex (or whoever can offer this space), and I'll post the code to a private Github repository while we work out the kinks. We can work out issues so that it is available when Canvas releases their new changes.

It would be helpful if those interested in this phase are:

Currently receiving either Canvas Raw or Caliper format events, or both.

Understand or can install and and use Ruby code and dependencies. The code is intentionally basic.

MSSQL, MySQL, PostgreSQL, or Oracle database environments.

I'd love to work with anyone who's especially talented at Indexing and Query Execution Plans. The Query used to display the information on the above screenshot utilizes a couple of views with Joins on CD/LE, and Stored Procedure with Temp Table use and additional Joins. With a WHERE clause in the temp table for course_id, the query takes about 3-8 seconds to load. I'd like to be able to utilize this data on larger sets and not have it take 60+ seconds... :smileygrin:

I'd also like to work on a Rakefile to automate the configuration setup. Not that the setup is hard, but we could probably programmatically automate and extend some features:

I duplicate all events (with common columns) into a 'live_stream' table, to prevent massive joins on dozens of tables. I use this table more than the individual tables. Others might want to configure this differently.

I have another program that's 1 file which collects and stores the GEO location data from the IP address... do we want to include this as a sub routine, or do people even want that?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-10-2019

07:51 AM

I am definitely still interested in helping with and collaborating on this. I feel like this could definitely be a game changer in our ability to get real time data from Canvas instead of being 24 to 36 hour delay like we have currently.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

03-04-2019

02:37 PM

GitHub - ccsd/ledbelly: LEDbelly - Live Events Daemon for Canvas LMS - SQS to SQL

Would appreciate any feedback or contributions.

If you use this code, please let me know.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

08-16-2019

02:14 PM

I'm working in U/T. We also use Canvas, so I will try it.

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

08-21-2019

08:42 AM

After running the "rake create_tables" there are some SQL scripts in /ledbelly/sql/ddl directory. I should run those SQL script manually, right?

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

08-21-2019

08:55 AM

Mostly. Essentially, yes... you have to run those scripts, but review them first. The rake create_tables task, is a best effort (my best effort) to get a DDL generated for each of the supported databases. I was able to create the database with each of the generated scripts and push events to those tables. Your specific environment might require some tweaks to the DDL. For Instance, while the script pulls the database name from the config, I (for SQL Server) have add 'dbo' into the schema files so each reads 'database.dbo.table_name'. Please file an Issue or pull request if you find problems.

I'll put up a discussion for LED soon.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-03-2019

01:35 PM

I have made your LedBelly program worked well in our Canvas developing environment(we use MySQL). It takes the Live event(Canvas Raw) from SQS of AWS and imports into MySQL. Thank you for your excellent work.

I noticed that in Canvas Release Notes (2019-09-21) there are some changes to the Live event : "All events are being compressed to allow for smaller payloads. All data elements with null values will be omitted from events payloads". These changes will be effect from Dec 21, 2019. Is your program going to be affected by them, or going to fix your program and make it fit in those changes?

Thanks a lot.

Luo

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-03-2019

02:37 PM

This specific change will not affect LEDbelly, and is already built in by default in how LED is written. At the time of development (even today) there is no definitive schema of the LE messages to validate against. I'm pretty confident that my 2 schema files are the most accurate [up-to-date] definition of the columns available in each event, even more [updated] than what's documented on the community or the API documentation. The problem is that as new scenarios are triggered, more values and more columns are populated for existing events. Here is an example commit from this morning, schema updates @4dfcdd3, adding 2 columns that recently got data and updating an existing column because it got a new value we hadn't seen. All this to say that... everything is expected to be NULL, LED will only care about what is sent. Furthermore, LED uses Ruby#compact to trim null metadata values, which is the same method that Canvas has used to remove nulls and... Compact live event payload · instructure/canvas-lms@da7a26a · GitHub. I've debated about using #compact on the hash before DB insert... but haven't settled on that yet.

I would love to chat more about your use of MySQL. Particularly, I'd like to fill in some blanks for database support. ledbelly/ledbelly_settings.rb at master · ccsd/ledbelly · GitHub Is that something we can work on together?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-04-2019

09:45 AM

Hi Robert,

I’m glad if I can do something for this program, but I’m just a LINUX system admin, not a developer. Or purpose is to do further study and analysis to Canvas data. I’ll do my best to assist you if you need me.

Thanks.

Luo

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

09-03-2019

03:16 PM

I've made another thread for discussing the specifics of LEDbelly here, LEDbelly - Live Events Daemon for Canvas LMS - SQS to SQL

Additionally, I've placed a bug bounty on the topic for anyone who'd like to tackle this question using a different solution. See https://community.canvaslms.com/docs/DOC-17354-awesome-canvaslms#CanvasLiveEvents

Bug bounty of 2,500 community badge points for the following blog posts and documentation:An AWS guide or CloudFormation Stack for Live Events consumption as described by:

Linda Feng here Getting SQS message to SQL Database #comment-130997

Mike Sharkey Here

as Colin Murtaugh has done for Canvas Data with Build a Canvas Data Warehouse on AWS in 30 minutes! OR, document and provide an example of SQS to SQL with Fluentd

Linda Feng here Getting SQS message to SQL Database #comment-130997

Mike Sharkey Here

as Colin Murtaugh has done for Canvas Data with Build a Canvas Data Warehouse on AWS in 30 minutes! OR, document and provide an example of SQS to SQL with Fluentd

Community help

Community help

To interact with Panda Bot, our automated chatbot, you need to sign up or log in:

Sign inView our top guides and resources:

Find My Canvas URL Help Logging into Canvas Generate a Pairing Code Canvas Browser and Computer Requirements Change Canvas Notification Settings Submit a Peer Review AssignmentTo interact with Panda Bot, our automated chatbot, you need to sign up or log in:

Sign in

This discussion post is outdated and has been archived. Please use the Community question forums and official documentation for the most current and accurate information.