Getting SQS message to SQL Database

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We are beginning to look at using #canvas live events and am wondering if there is anyone out there currently using them and could provide us with some best practices of how to use the events and getting the event message data out of the Amazon SQS and into a SQL database table where you can actually use the data from the message to produce reports.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @bneporadny ,

TLDR; can you tell we're excited about this! :smileygrin:

I have been working on this solution for about 6 months now. I have designed it to be shared with the Canvas Community and it has gone through several iterations. I recently got over a few hurdles after working with Canvas on some details and then they shifted some resources to improving Live Events, and if you look at the Roadmap are working on releasing some of those improvements over the next few months. Some of those improvements are to handle an increased volume of schools deciding to use Live Events and how they can consume them (SQS or HTTPS), subscribe to them (in the UI) and changing which to use at the UI level (Caliper vs Canvas Raw). Currently Canvas has to have an engineer set it up for each instance. Because of these shifts and improvements, I told them I'd hold off on releasing the code to prevent an influx of requests before they had everything in place.

With that said... I was planning on a more formal release, blog post and explanation of the code early next year, but would be happy to share what I've been working on and why. So...

I was listening to Huddie Ledbetter aka Leadbelly's blues music one morning when I decided that was a fantastic name for my Live Events Daemon. LEDbelly, as I've come to call it, is a lightweight daemon that runs on a system (currently my Mac Mini while I build our new task server), connects to Amazon SQS, consumes messages for either Canvas Raw or Caliper format, and can push them into SQL.

Because I've used numerous community contributions over the years to help run our processes, including the many contributions of @James ... and when I was working on this and found few resources and mentions of Live Events here, I was inspired to write the code with the intention of making it available to anyone.

Some of my choices around Canvas Community were asking what databases are used so that I could support them. I can currently push events into MSSQL, MySQL, PostgreSQL and would like to support Oracle, but am waiting on my dev environment to be setup and anyone (using Oracle) in the community to answer a few questions.

My intention in making this available:

There are better ways to handle this data... (Splunk, AWS)

There are schools with money who can build nice data lakes and process this data on AWS...

we don't have that funding or the skills, yet. many of us don't, this is for us!

Not everyone is a data analyst, programmer, or understands Big Data

For the rest of us, SQL is the only way to handle data... unstructured or not

If we all write our own programs we're all reinventing the wheel instead of improving it

I'm hopeful there is interest from the community to collaborate and build something we can all use...

... if not, it will be available as is (as I add to it) for your use or as a starter kit. I will be contributing this to our Github account early 2019 when Canvas can handle the increased volume and turning on LE for Canvas is easier.

Since you asked about best practices and how to use it, I'll share some details.

I will definitely be writing another article on this soon but would like to give you some features now.

Ledbelly

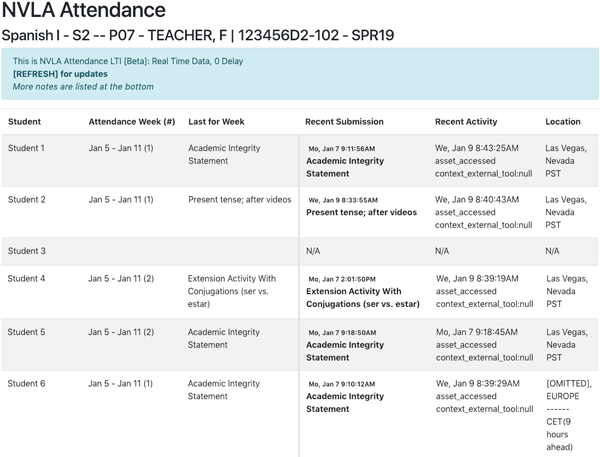

When not running in the background I can watch events happening in real time. The date is old because I tried to post a 60 second video last month but Twitter hated the format.

Multi-threaded daemon for consuming SQS messages quickly... Multi threading is necessary because sometimes you'll see one or two events a second, or a minute... during peak times many many more. This is especially necessary since multiple events happen at the same time, I found that importing 1 message at a time in SQL would quickly create a backup and latency as I couldn't insert them fast enough.

Here's a screen grab from our internal ops dashboard smithb_ccsd developed to keep an eye on all our in-out processes.

Connection pooling, just like multi threading, the database connection needs to be able to handle increases and decreases in the amount of transactions happening in the database.

The rest of the features are pretty basic. I chose the Canvas Raw Format vs the Caliper format because its compatible with Canvas Data and is easier to consume, and it has more events. Both can be consumed at the same time. The code is currently set up to import the Caliper Format into SQL with underscore notation and dynamically generate tables in a test environment... I do this to track changes and help answer questions that pop up. Thoughts on that here... https://community.canvaslms.com/docs/DOC-15741-event-type-by-format#comment-132790

For the Canvas Raw format, I have mapped the event fields, setup data types and have generated DDLs for the 3 databases I mentioned above and written code to dynamically generate those DDLs when I needed an update or to create documentation. Live Events changes quite often, just this week they added client_ip to many of the events. I've been trying to help update the documentation, by creating Pull Request #1379 with an update to the API doc...

canvas-lms/live_events.md at live-events-documentation · robert-carroll/canvas-lms · GitHub

I've spent a decent amount of time trying to track the changes in the Canvas Github commits to keep the code up to date. On the off chance that I miss something in the code here are the exception handlers...

New Events... or unidentified events the code wasn't expecting. If a new event is sent to the SQS stream and the code isn't ready for it we'd either throw an error or (because I'm using a Case statement) would completely ignore it. When this happens I instead push that event to a secondary SQS queue (mine is called -moo, because cows have multiple bellies and I'm a nerd). This allows me to catch the event, evaluate it, and update the code. I can also log the payload to a local file.

Field Count... sometimes new fields appear in the event the code wasn't expecting, and I use this method (which is written to an error file) to evaluate the field, assess its data type and update the schema and the code. Otherwise the code would quietly continue working and never add that data to the database.

---

One of the things I've tried to do is prevent myself from making assumptions for the community where we'd probably benefit from collaborating on best practices. Currently, I push all events into their own table, which makes it easier to join data or update data with Canvas Data... but just yesterday I added a new method which grabs common fields in all events and pushes them to a live_stream table, which I use for user activity. This allows me to create faster views when trying to associate things in Canvas data for Users vs Records... joins are slow, so I currently feel like the speed benefit is worth the duplication. Also since Canvas Data will pick up most of this information within 48 hours... we probably don't need to store it for too long. But I haven't found how long I should hold on to it yet...

If you are already using Canvas Data, a lot of the events can be appended to reports to giving you the most updated submission timestamp or activity for a user, this helps extend Canvas Data since your last dump/import.

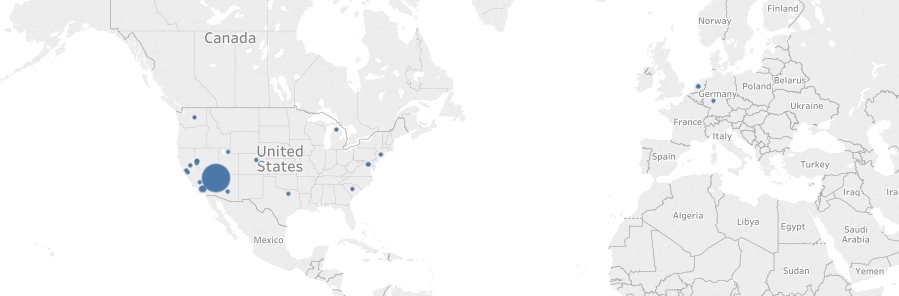

One thing we've been working on is displaying current number of users who are online and eliminating noise from the Requests Table which I... The Problem with the Requests Table. I can easily eliminate Amazon Data Centers (backend Canvas tasks) from current user activity. I have not quite figured out yet how to eliminate all noise from the table... but not entirely sure now if that's even necessary. I had originally asked for an IP address in the Login Event... but with IP in almost all events... the Requests Table starts becoming more useful for troubleshooting DevOps and random questions CD won't answer like this and this, not user activity...

User locations in the last 2 hours... (I append geo location data to this and view it in Tableau)

Last but not least, with Canvas Data... since we have over 350 schools. I can use the context_id from the event, get the course_id then the account_id and list the current users in Canvas at each School.

That's just who and how many data... Live Events is extremely useful in allowed applications and processes to act in real time instead of off old Canvas Data or static Account Reports.

If you already have Live Events, we can possibly start collaborating before I push the code publically.

Please feel free to ask any questions or DM me.

Canvas Data definitely requires a beefier box. I'm lucky that ours is a VM and I can just ask for more resources when necessary. Spam now?! You wear so many hats!

Canvas Data definitely requires a beefier box. I'm lucky that ours is a VM and I can just ask for more resources when necessary. Spam now?! You wear so many hats!