Null values for submission_type in submission_dim

11-14-2019

10:07 AM

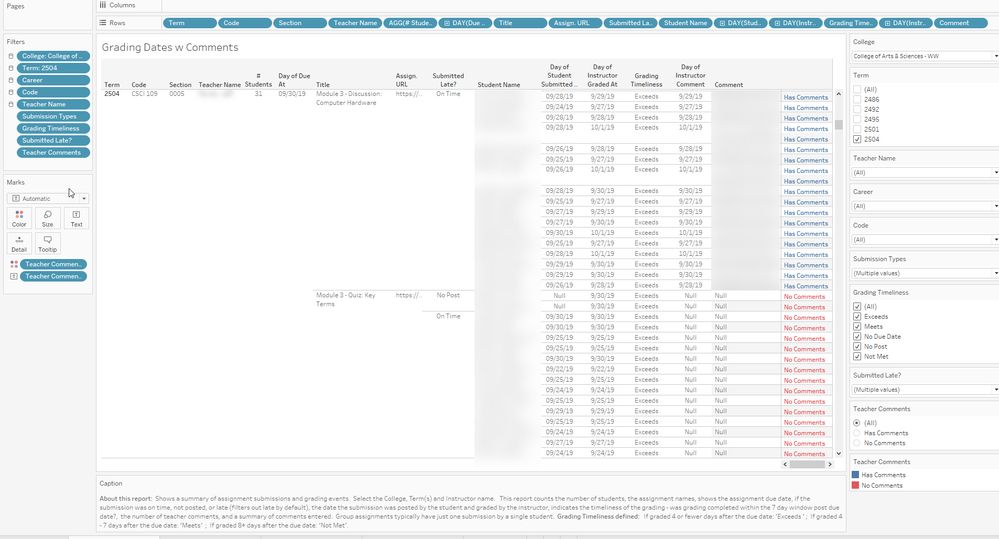

I am working on a report that counts the number of submissions in a Canvas course. When I count the submission IDs (from submission_dim) in a course, the result is larger than the number displayed in course statistics. I explored this further in Tableau and found a large number of submissions with a null value in the submission_type column. When I exclude those null-valued submissions from my count, I get the same number as in course statistics. Does anyone know why some submissions would get a null value in submission_type? I checked several of these, and they should be showing online_upload.
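For anyone who wants to reproduce the comparison outside Tableau, here is a minimal sketch of the breakdown I am describing. The rows are made-up stand-ins for submission_dim (only `id` and `submission_type` are shown), and the helper names `count_by_type` and `count_non_null` are hypothetical, not part of Canvas Data.

```python
from collections import Counter

# Hypothetical sample rows standing in for submission_dim.
# The ids and values are invented for illustration only.
rows = [
    {"id": 1, "submission_type": "online_upload"},
    {"id": 2, "submission_type": None},
    {"id": 3, "submission_type": "online_text_entry"},
    {"id": 4, "submission_type": None},
    {"id": 5, "submission_type": "online_upload"},
]


def count_by_type(rows):
    """Count submissions per submission_type, keeping None as its own bucket."""
    return Counter(row["submission_type"] for row in rows)


def count_non_null(rows):
    """Count only submissions whose submission_type is not null."""
    return sum(1 for row in rows if row["submission_type"] is not None)


counts = count_by_type(rows)
total = len(rows)            # every submission id, nulls included
non_null = count_non_null(rows)  # the count that matched course statistics for me

print("per type:", dict(counts))
print("all ids:", total, "| non-null only:", non_null)
```

The gap between the two totals is the size of the null bucket, which is the group of rows I am asking about.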