A Major Step For Better Media Analytics

If you follow the Release Notes or have already looked at the insights of one of your media in Studio today, chances are you noticed that something has changed considerably. We made this change because we believe that better understanding how students watch course videos is closely tied to student success in many respects. And there's more: looking further ahead, we reworked the underlying pipeline as well. In fact, much of what the Studio engineering team worked on over the last few months happens behind the scenes. You won't see it, but it is the foundation of all media analytics coming to Studio later.

But let’s not jump the gun. Let’s go through the redesigned page first.

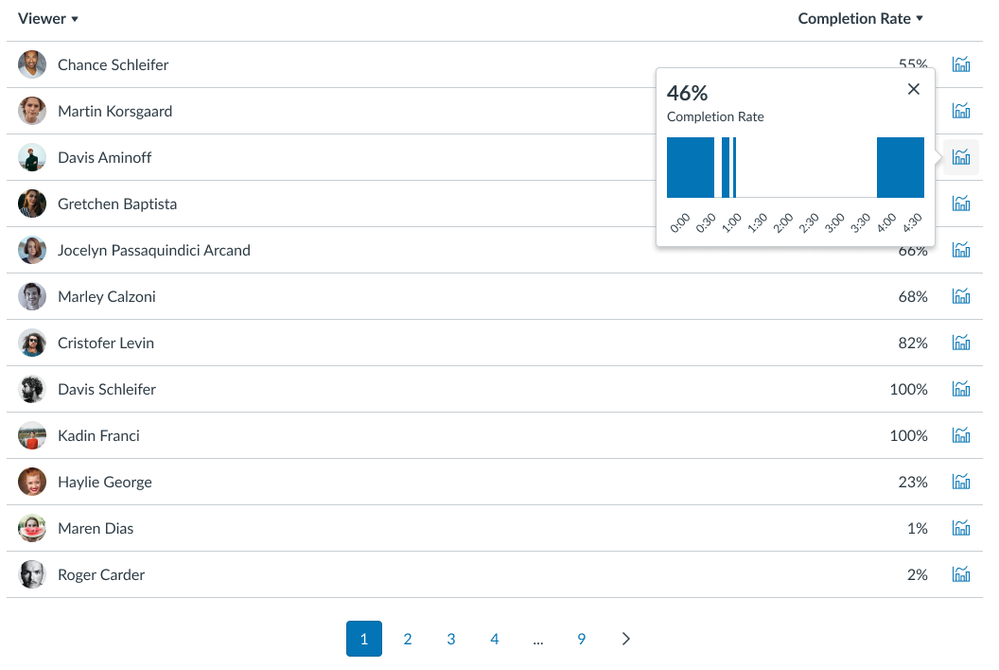

First, with the previous representation of viewership data, interpreting it student by student took an unreasonable amount of time. We did not change how this data is structured, but we moved viewers into a table, added completion rates, and made the table sortable by both columns. Had trouble finding students who missed course videos? Tick. Had trouble identifying those who watched less than 80% of each video and so may not pass the course? Tick. Or struggled to find a particular student in the insights of a weekly announcement video sent out to 400+ students? We've covered that too!
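The post doesn't spell out how Studio calculates completion rates, so the following is only a minimal Python sketch under an assumption of our own: a student's completion rate is the share of the video's duration they watched at least once, with overlapping or repeated segments merged. The (start, end) interval format and the functions `completion_rate` and `below_threshold` are hypothetical. It shows how completion rates make it easy to list students under the 80% mark, lowest first, including those who never opened the video.

```python
from typing import Dict, List, Tuple

# Hypothetical input: a watched segment as (start_s, end_s), positions in seconds
Interval = Tuple[float, float]


def completion_rate(intervals: List[Interval], duration: float) -> float:
    """Share of the video covered by at least one watched segment (0.0 to 1.0).

    ASSUMPTION: this is one plausible definition, not Studio's documented one.
    Overlapping segments are merged, so rewatching never exceeds 100%.
    """
    if duration <= 0:
        return 0.0
    covered = 0.0
    cur_start = cur_end = None
    for start, end in sorted(intervals):
        start, end = max(0.0, start), min(duration, end)
        if end <= start:
            continue  # empty segment or one lying outside the video
        if cur_end is None or start > cur_end:
            # New disjoint run: bank the previous one before starting over
            if cur_end is not None:
                covered += cur_end - cur_start
            cur_start, cur_end = start, end
        else:
            # Overlaps or touches the current run: extend it
            cur_end = max(cur_end, end)
    if cur_end is not None:
        covered += cur_end - cur_start
    return covered / duration


def below_threshold(
    viewers: Dict[str, List[Interval]], duration: float, threshold: float = 0.8
) -> List[Tuple[str, float]]:
    """Students under the threshold, sorted by completion rate, lowest first."""
    rates = [(name, completion_rate(segs, duration)) for name, segs in viewers.items()]
    return sorted((r for r in rates if r[1] < threshold), key=lambda r: r[1])
```

For a 600-second video, `below_threshold({"Ana": [(0, 300), (250, 600)], "Ben": [(0, 120)], "Cleo": []}, 600)` lists Cleo (0%) and then Ben (20%); Ana's overlapping segments merge into full coverage, so she is not flagged.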

Second, we know that some teachers prefer to work with such reports in their own tools. Export the report, and you'll get this data in CSV format, including a few more details about each viewer (role and email address).

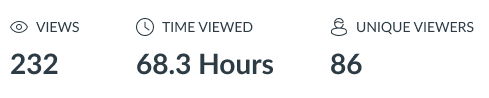

Third, usage metrics are key to understanding and comparing how the different videos in a course are consumed, and they can be crucial when such numbers must be reported to an external body. Although these metrics are only available for each media separately today, they will serve as the basis of course-level usage analytics in the future. Let me walk you through the three new metrics:

- Views: the approximate number of times viewers have interacted with the selected video, estimated from viewing patterns. Keep in mind that this is not based on an exact rule, such as YouTube counting one view when someone watches a video for at least 30 seconds in one session. Educational materials are different, so we estimate the number of views from viewing patterns instead. This is a metric we'll continuously seek feedback on.

- Time Viewed: the total amount of time viewers spent watching the video.

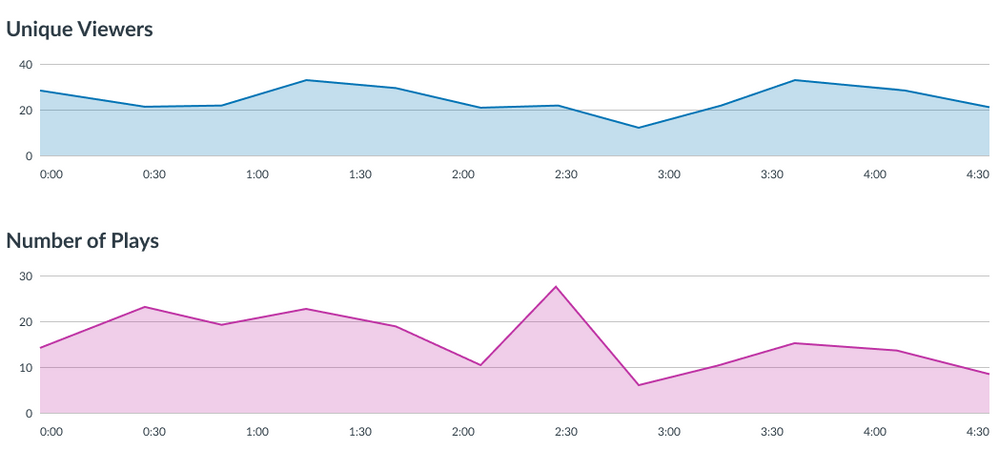

- Unique Viewers: the number of distinct students who watched the video. Interpret this number together with the two metrics above, and you'll get a good grasp of how your video performs among students.
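The metrics above are defined only informally. As an illustration, here is a hedged Python sketch of the two with a simple definition, Time Viewed and Unique Viewers, computed from a hypothetical list of watch segments (`WatchSegment`, its fields and `usage_metrics` are assumptions, not Studio's data model). Views is deliberately left out, since Studio estimates it from viewing patterns by a method the post doesn't describe.

```python
from dataclasses import dataclass
from typing import Iterable, NamedTuple


@dataclass(frozen=True)
class WatchSegment:
    """Hypothetical record of one continuous stretch of playback."""
    viewer_id: str
    start_s: float  # video position where playback began
    end_s: float    # video position where playback stopped


class UsageMetrics(NamedTuple):
    time_viewed_s: float  # total watching time; rewatches count every time
    unique_viewers: int   # distinct viewers with at least one segment


def usage_metrics(segments: Iterable[WatchSegment]) -> UsageMetrics:
    total = 0.0
    viewers = set()
    for seg in segments:
        total += max(0.0, seg.end_s - seg.start_s)  # ignore malformed segments
        viewers.add(seg.viewer_id)
    return UsageMetrics(total, len(viewers))
```

Reading the two together, as the post suggests, can be as simple as dividing `time_viewed_s` by `unique_viewers` to get the average watching time per student.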

Fourth, the chart showing the number of plays. Students might consistently skip some parts of a video that seem irrelevant to them or, on the contrary, revisit a part several times, for example where a complicated math equation is explained. This chart aims to answer the question: how did students interact with a particular video? You will see peaks where students revisited a section for some reason, and dips where students felt those parts were less important for their learning.
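A chart like this can be thought of as a histogram over the video's timeline. The minimal Python sketch below assumes watch segments are available as (start, end) positions in seconds and counts, for each fixed-size bucket (10 seconds here, an arbitrary choice), how many segments covered it. Repeated viewings count each time, so revisited parts show up as peaks. The function name and bucket size are hypothetical, not Studio's implementation.

```python
import math
from typing import List, Tuple


def plays_per_bucket(
    segments: List[Tuple[float, float]], duration: float, bucket_s: float = 10.0
) -> List[int]:
    """Count how many watch segments covered each bucket of the video timeline."""
    n = math.ceil(duration / bucket_s)
    counts = [0] * n
    for start, end in segments:
        start, end = max(0.0, start), min(duration, end)
        if end <= start:
            continue  # nothing watched inside the video
        first = int(start // bucket_s)                 # bucket holding the start
        last = min(n - 1, math.ceil(end / bucket_s) - 1)  # bucket holding the end
        for i in range(first, last + 1):
            counts[i] += 1
    return counts
```

For instance, `plays_per_bucket([(0, 60), (20, 40), (20, 40), (50, 60)], 60)` returns `[1, 1, 3, 3, 1, 2]`: the 20 to 40 second section, watched three times, stands out as a peak.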

Frankly, this is a big change for us too, and there are still caveats we will address later. One of the first will be a filter that shows only students with active enrolments in the Canvas course. We are aware that students' viewership data remains here even after they have completed a course, and that's already on our radar.

So why is this a big change for us?

Think about the last time you wanted to cross-check a student's performance against their video usage patterns. Was her average completion rate across all the videos in the course below 30%? Or was it high, and you wanted to give her positive feedback to reinforce the habit of watching lecture videos? Or did you wonder whether you could structure your course differently so that students in general spend more time watching your presentations? Today, Studio can't give you a straightforward answer to these questions (well, you can still gather the necessary data with some manual effort). But answering most of them will rely on the newly introduced metrics, so we must be absolutely sure we get them right. For that very reason, this is not just a redesigned Insights page but the foundation of student-level, course-level and account-level media analytics.
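The manual route for the first question could look like the following Python sketch, which averages each student's completion rate across several per-video CSV exports and flags those below 30%. It assumes each export has `name` and `completion` columns, with completion as a percentage; those headers and the function names are hypothetical, so adjust them to the actual export.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, List


def average_completion(exports: List[str]) -> Dict[str, float]:
    """Average completion (percent) per student across per-video CSV exports.

    ASSUMPTION: hypothetical 'name' and 'completion' columns, completion 0-100.
    """
    totals: Dict[str, float] = defaultdict(float)
    for text in exports:
        for row in csv.DictReader(io.StringIO(text)):
            totals[row["name"]] += float(row["completion"])
    # Dividing by the number of videos (not the rows found) treats a student
    # missing from one export as 0% for that video.
    return {name: total / len(exports) for name, total in totals.items()}


def flag_low(averages: Dict[str, float], threshold: float = 30.0) -> List[str]:
    """Names of students whose average completion is below the threshold."""
    return sorted(name for name, avg in averages.items() if avg < threshold)
```

Students who appear in no export at all won't show up in the result, so they still need to be checked against the course roster.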

One more behind-the-scenes improvement is usage tracking in places with poor network coverage, such as rural areas. In the past, viewership tracking could stop when the device lost its connection, and sometimes it did not resume for that video until the page was refreshed. In January, the engineering team did some magic: the browser now collects viewership data while offline and sends it once the connection is restored. Yay!
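How Studio does this internally isn't described here, but the general pattern of buffering events locally and sending them once the connection returns can be sketched in Python. `BufferedTracker`, the event shape and the `send` callable are all hypothetical; `send` is assumed to raise `ConnectionError` while the device is offline.

```python
from collections import deque
from typing import Callable, Deque, Dict, List

# Hypothetical event shape, e.g. {"start_s": 0.0, "end_s": 15.0}
Event = Dict[str, float]


class BufferedTracker:
    """Queue viewing events locally and deliver them when sending succeeds."""

    def __init__(self, send: Callable[[List[Event]], None]) -> None:
        self._send = send  # hypothetical transport; raises ConnectionError offline
        self._pending: Deque[Event] = deque()

    def record(self, event: Event) -> None:
        """Store the event first, then try to deliver everything queued."""
        self._pending.append(event)
        self.flush()

    def flush(self) -> bool:
        """Send all queued events as one batch; keep them if sending fails."""
        if not self._pending:
            return True
        batch = list(self._pending)
        try:
            self._send(batch)
        except ConnectionError:
            return False  # still offline: retry on the next flush
        self._pending.clear()
        return True

    @property
    def pending(self) -> int:
        return len(self._pending)
```

In a browser, a comparable design would call `flush()` from a handler for the window's `online` event and persist the queue (for example in `localStorage`) so events also survive a page refresh.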

Let us know what you think!

Akos

The content in this blog is over six months old, and the comments are closed. For the most recent product updates and discussions, you're encouraged to explore newer posts from Instructure's Product Managers.