Show Average Score for Each Quiz Attempt

02-06-2021

07:23 PM

(I tried to post this earlier but I am not sure if it went through)

I have a quiz with two attempts set to take the average score. In quiz statistics, can I see the average score for each of the two attempts?

I read this in the guide: If a student had multiple assignment attempts, you can view past attempts in SpeedGrader. Quiz stats will only display the kept score for the student (highest score or latest score). To view the score setting for multiple attempts, edit your quiz and view the multiple attempts settings option. If necessary, you can give your students an extra attempt.

1. Does this mean that in "quiz statistics" it is only showing me either the highest score or the latest score?

2. I think the sentence "to view the score setting for multiple attempts..." answers my question, but I don't understand what the solution is - edit my quiz and view the multiple attempts settings option??

Thanks,

Russell

4 Replies

02-06-2021

10:01 PM

If I misunderstand your question please let me know.

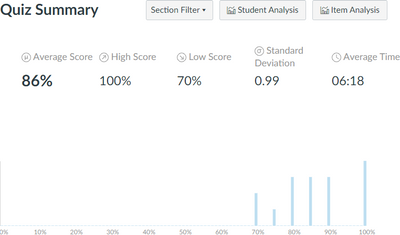

I take it to mean that you are asking about the average score that appears at the top of the Quiz Summary for a legacy quiz. That is, the 86% in the image below. Under "Section Filter", all sections are selected.

I set my scores to also be the average attempt, but I do not use the quiz statistics summary because I basically find it useless.

I downloaded the Student Analysis, where it gives me the score for every attempt for the students. I brought it into Excel, created a pivot table based on the student's name, and asked for the average score across all the attempts.

- When I used the mean score of all the students, my average score was 82.59%, not 86%.

- When I used the highest score of any of the attempts, my average score was 88.15%, not 86%.

- When I used the score from the last attempt, then my average score was 88.15%, not 86%, which means that everyone finished higher on their last attempt.

- When I used the score from the first attempt, then my average score was 77.59%, not 86%.

- When I used the score for every attempt, then my average score was 80%, not 86%
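For anyone who would rather script the pivot-table step than do it in Excel, here is a minimal sketch of the five ways of averaging listed above. It assumes the Student Analysis export has already been reduced to (student, attempt number, percent score) rows; the names and scores below are invented for illustration and are not the data from my quiz.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical rows: (student, attempt number, score as a percentage).
# These values are made up; they are NOT the quiz data discussed in this post.
attempts = [
    ("Ana", 1, 60.0), ("Ana", 2, 80.0), ("Ana", 3, 100.0),
    ("Ben", 1, 70.0), ("Ben", 2, 90.0),
    ("Cy", 1, 85.0),
]

def summarize(rows):
    """Return the class average under each 'kept score' interpretation."""
    by_student = defaultdict(list)
    # Sort by student, then attempt number, so each list is in attempt order.
    for student, _attempt, score in sorted(rows, key=lambda r: (r[0], r[1])):
        by_student[student].append(score)
    per_student = list(by_student.values())
    return {
        "mean_of_student_means": mean(mean(s) for s in per_student),
        "mean_of_highest": mean(max(s) for s in per_student),
        "mean_of_last": mean(s[-1] for s in per_student),
        "mean_of_first": mean(s[0] for s in per_student),
        "mean_of_all_attempts": mean(score for _, _, score in rows),
    }

summary = summarize(attempts)
for label, value in summary.items():
    print(f"{label}: {value:.2f}%")
```

With real data, the first entry corresponds to a quiz set to keep the average score, and the last one treats every attempt as its own data point.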

At this point, you may be thinking that Canvas is totally screwed up. Or perhaps that I took the screenshot for a different quiz than the one I downloaded the results for (I didn't).

I moved on to the bar chart that says no one scored less than 70%. That is true if I look at the maximum score, but not the average score. For the average score, my lowest was 58.33%.

I then found a weighted average of the average scores, weighted by the number of attempts. The students had 45 attempts between them.

- The weighted average of the max score was 89%, not 86%. That is, if I replace every attempt by that student's maximum score and then find the mean, I get 89%.

- The weighted average of the average scores was 80%, not 86%. This is mathematically equivalent to finding the average for all attempts without weighting, but at this point I'm starting to doubt my math skills, so it serves as confirmation.
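The equivalence mentioned in the last bullet can be written out. The notation here is my own shorthand, not anything Canvas uses: student $i$ has $n_i$ attempts with scores $x_{ij}$ and a per-student average $\bar{x}_i$.

```latex
\bar{x}_i = \frac{1}{n_i}\sum_{j=1}^{n_i} x_{ij}
\quad\Longrightarrow\quad
\frac{\sum_i n_i\,\bar{x}_i}{\sum_i n_i}
  = \frac{\sum_i \sum_{j=1}^{n_i} x_{ij}}{\sum_i n_i}
```

The right-hand side is just the sum of every attempt's score divided by the total number of attempts (45 here), which is why both calculations land on 80%.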

All of those had sample sizes of 27, but there are 30 students in my class. 3 students didn't take the quiz at all. I then went into the gradebook and exported the grades. When I looked at the results, I had 27 students who had taken it and 3 zeros.

- The average of the 30 grades (including the 0's) was 74.33% not 86%.

- The average of the 27 students with grades was 82.59%, not 86%. This is pretty close to the 82.59% that I had with the pivot table, but the numbers exported from the gradebook were rounded to 2 decimal places, so it was really 82.59259259% from the student analysis and 82.58888889% from the gradebook. Neither one is 86%, though.

- If I use all 45 attempts, but throw in the 0 for the three students who didn't take the exam, then I get 83.44%, not 86%.
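As a quick sanity check on the first two gradebook bullets, the average that includes the three zeros should follow directly from the average of the 27 students who took the quiz. A small sketch using only numbers quoted above:

```python
takers = 27      # students with a grade
enrolled = 30    # students in the class (3 did not take the quiz)
mean_of_takers = 82.58888889  # gradebook average of the 27 takers, in percent

# Adding zeros leaves the sum of scores unchanged and only grows the denominator.
mean_with_zeros = mean_of_takers * takers / enrolled
print(f"{mean_with_zeros:.2f}%")
```

That comes out to the 74.33% reported in the first bullet, so the two gradebook figures agree with each other even though neither matches Canvas.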

Since I'm unable to match their results, I now go into the Developer Tools in Chrome and look at the information that is retrieved. It gets the values from the quiz statistics API. From that network call, I find out this information (the quiz was worth 10 points).

correct_count_average: 2.4375

duration_average: 378.1875

incorrect_count_average: 0

score_average: 8.625

score_high: 10

score_low: 7

score_stdev: 0.9921567416492215

scores: {70: 2, 75: 1, 80: 3, 85: 3, 90: 3, 100: 4}

unique_count: 16

Aha, the average is 86.25%, not 86%. But I still never got that number.

The weird thing is the unique count of 16. I have 27 students who took the quiz. What is that about? If I add up the counts for the scores, 2+1+3+3+3+4, I get 16.

If I find the weighted sum of their scores, I get 2*70+1*75+3*80+3*85+3*90+4*100=1380. Now if I divide that by the 16 values, I get ... wait for it ... 86.25%

So the 86.25%, which is displayed as 86%, is not the real average of the course, but the weighted average of highest scores for some of the students who took the quiz.
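The same check can be scripted from the `scores` histogram in the API response. This is only a sketch of the arithmetic, not how Canvas computes it internally. The standard deviation part is an extra check I am assuming is computed as a population standard deviation on the 10-point scale.

```python
from math import sqrt

# Histogram from the API response: percent score -> number of values.
scores = {70: 2, 75: 1, 80: 3, 85: 3, 90: 3, 100: 4}
points_possible = 10  # the quiz was worth 10 points

count = sum(scores.values())                               # 16, the unique_count
weighted_sum = sum(pct * n for pct, n in scores.items())   # 1380
average_pct = weighted_sum / count                         # 86.25

# Convert percentages to points and compute a population standard deviation.
mean_pts = average_pct * points_possible / 100
variance_pts = sum(
    n * (pct * points_possible / 100 - mean_pts) ** 2 for pct, n in scores.items()
) / count
stdev_pts = sqrt(variance_pts)

print(count, weighted_sum, average_pct, stdev_pts)
```

Under that assumption the standard deviation of these 16 values comes out to about 0.99216, which lines up with the reported `score_stdev`. That suggests every summary number on the page is built from the same 16 values.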

Are you starting to see why I said I find those statistics useless?

And why are there only 16 values when I had 27 students who took it?

If I filter by section (none of my students are in multiple sections), then I get 10 for that unique count for each section. This is despite 17 out of 18 students taking it in one section and 10 out of 12 taking it in the other.

Is that 10 meaningful?

Maybe the 10 and 10 represent the number of students who only took one attempt? Nope. I had 15 take it once. I had 6 take it twice and 6 take it three times. There's no logical way to get 10 out of those numbers.

I checked another quiz. Canvas reported that I had 3 students attempt that quiz when I had 29 students take it and there were 68 total attempts. So what is going on? For me, I hoped it had something to do with my use of question groups. I have enough questions that each question sees little usage. Still the question that was delivered the least was delivered 13 times and to more than 3 students.

None of these quizzes had questions that were modified this semester. There was one quiz [neither of the two I'm looking at] where I modified the instructions to remind students not to use Safari while taking the quiz because the images may not show up, but I did not modify the questions on any of them.

There is one optional parameter that you can pass to the API to get the quiz statistics. That is whether to include all_versions. Using all_versions=true or all_versions=false made absolutely no difference in my case.
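If you want to repeat this comparison outside the browser, here is a rough sketch of building the request. The endpoint path and the bearer-token header are my recollection of the Canvas REST API conventions and should be checked against the official API documentation. The host, IDs, and token are placeholders.

```python
from urllib.parse import urlencode
from urllib.request import Request

def build_statistics_request(base_url, course_id, quiz_id, token, all_versions=False):
    """Build (but do not send) a request for a quiz's statistics.

    The path below is an assumption based on the Canvas REST API layout;
    verify it against your instance's API documentation before relying on it.
    """
    query = urlencode({"all_versions": "true" if all_versions else "false"})
    url = (
        f"{base_url.rstrip('/')}/api/v1/courses/{course_id}"
        f"/quizzes/{quiz_id}/statistics?{query}"
    )
    return Request(url, headers={"Authorization": f"Bearer {token}"})

# Placeholder values for illustration only.
req = build_statistics_request(
    "https://canvas.example.edu", 123, 456, "TOKEN", all_versions=True
)
print(req.full_url)
```

Sending the request (for example with `urllib.request.urlopen`) once with `all_versions=True` and once with `all_versions=False` lets you compare the two responses.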

There is a line in the source code that says the scope is all quiz submissions that are not settings only and have been completed. Maybe there is some strange reason my quizzes aren't getting completed? So I downloaded the quiz submissions through the API, and all 27 students (I'm back to my first quiz) had a status of completed and the "kept" score is the average.

Further compounding things is that the tooltip on the graph claims that it is the "Score percentiles chart." That is just wrong. If that's the case, why are all of my students in the 70th percentile or higher? And how do you figure that the average of the top percentiles should count as the average for the course?

As messy as the first answer was (it appears to be keeping the highest grade, but for only some of the students), the second one is straightforward. You don't edit the quiz at all. Go to SpeedGrader for the quiz and look at the top right below the student's name.

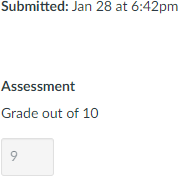

If a student has only taken the quiz once, you will see something like this:

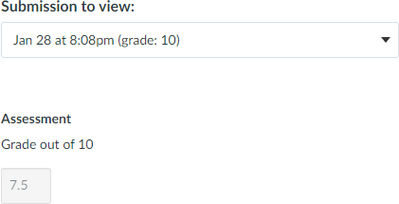

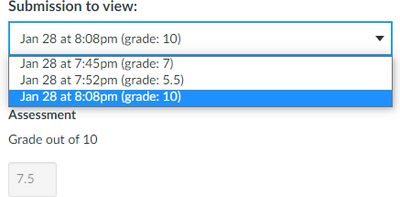

If the student has taken the quiz multiple times, then you will see something like this:

This student's final grade is 7.5. If I click on the drop down, I can see the grades for each of the attempts.

I hope that got you close enough to an answer to know not to use quiz statistics. In fairness, it might be different if all of your questions were multiple choice.

02-07-2021

08:40 PM

02-08-2021

03:22 PM

I'm going to have to admit that I don't get the meaning of your Spaceballs GIF, but then I don't do well with buttons that have no text on them, either. Is this you banging your head? If so, I wish I could simplify it. I hope your numbers aren't as jacked up as mine were.

02-11-2021

08:27 PM

No, not banging my head at all! After your completely masterful and thorough answer, the only thing I could think of is that you had gone "plaid." Not quite as obvious as just adding some lame Dragon Ball Z Super Saiyan GIF (Lone Star > Goku any day, ammiright?)

I have also never found much benefit in the Canvas quiz statistics, but I at least assumed the averages were accurate. Just your mention of exporting to Excel for my own calculation was very helpful.

This discussion post is outdated and has been archived. Please use the Community question forums and official documentation for the most current and accurate information.