[ARCHIVED] Success Rates for Online Students

08-21-2018

12:58 PM

I am trying to find some national stats about success rates for students in online courses. The most current information I have been able to find is from 2014 and is based on California community colleges. Does anyone know where I can find national stats on this subject? I'd like to know how our online course success rate stacks up against a national average.

23 Replies

08-22-2018

03:04 PM

Hi Andrea, can you say a little more about what success means for your purposes? For example, I'm thinking it could mean...

- Online student grades / outcomes

- Completion / attrition rates in online courses

- Completion rates in fully online programs

Also, would you be looking to compare online to f2f?

08-22-2018

04:11 PM

Hi Jared,

I'm looking for all of the above. I've been reading a lot lately that success rates (grades of A, B, C, and P) in online courses trail those of the same f2f courses by about 10%, but I can't seem to find specific studies behind those numbers. I've also seen averages of around 60% success for online and 70% for f2f, but I think what I've seen has been based mainly on a 2014 study of California community colleges.
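A note on the arithmetic when comparing against a "national average": a gap between 60% and 70% success is 10 percentage points, but measured relative to the f2f rate it is roughly 14%. Studies don't always say which one they mean. The minimal Python sketch below computes both; the function name `success_gap` is hypothetical, and the 60%/70% inputs are just the figures quoted above, used for illustration.

```python
def success_gap(online_rate: float, f2f_rate: float) -> tuple[float, float]:
    """Return (percentage-point gap, relative gap in %) between two success rates.

    Rates are fractions in [0, 1], e.g. 0.60 for 60%.
    """
    # Absolute difference, expressed in percentage points
    point_gap = (f2f_rate - online_rate) * 100
    # Same difference expressed as a share of the f2f rate
    relative_gap = (f2f_rate - online_rate) / f2f_rate * 100
    return point_gap, relative_gap


points, relative = success_gap(0.60, 0.70)
print(f"{points:.1f} percentage points, {relative:.1f}% relative")
```

With those inputs this prints 10.0 percentage points and 14.3% relative, so "about 10%" and "about 14%" can describe the same gap.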

I recently read this article in the Chronicle (https://www.chronicle.com/article/Can-a-Huge-Online-College/244054?cid=cc&utm_source=cc&utm_medium=e...) that talked about the paradox that success rates are lower for online courses, but students who take at least one online course are more likely to graduate. It made me start looking at those numbers for our institution and trying to find them nationally, or even at the state level (I'm in Missouri), to compare. That's proving difficult. I moved from a faculty position to Director of Online Learning this spring and am only now able to really get into the weeds with the numbers. I was hoping someone could point me toward some good studies.

08-22-2018

03:12 PM

Not directly related to the question of online versus face-to-face, but I have to give a shoutout to @kona for tracking student success and completion rates while redesigning her courses with student-centered design strategies. The results are so encouraging! I included her numbers in a post I wrote about her presentation at InstCon:

And you can find out all about her redesign here:

Energize your class with Student-Centered Course Design

To me, those are the most powerful kinds of stats because you can feel more confident about just what is getting compared, even if it is an admittedly limited study. When you start doing campus-wide or system-wide studies, I'm not really sure that what we are measuring is what-we-really-want-to-measure. 🙂

08-22-2018

03:41 PM

Thanks for the shout-out, laurakgibbs! To be honest, I haven't really looked at (or for) national retention rates recently. At my college, though, there is no statistically significant difference in retention (staying in the course) or success (completing with a C or better) between our online and traditional courses. This is something we're pretty proud of and have worked hard to achieve. In my own online course, I've also implemented some fun/cool things that greatly improved the success of my online stats students. For example: before, 18% A, 31% B, 48% C; last semester, 39% A, 50% B, 12% C. And this was without lowering the standards or rigor of the course. 🙂

Hope you are able to find the information you're looking for!

Kona

08-23-2018

07:36 AM

@kona ,

You should be proud! It sounds like you've done some incredible things, and your online stats results look great. Would you be willing to share any of the fun/cool things you've implemented?

Andrea

08-23-2018

07:42 AM

Kona's work is amazing! There is a super-abundance of very specific (and generalizable!) advice in that presentation she did at InstCon 🙂

https://community.canvaslms.com/community/instcon/instructurecon-2018/blog/2018/08/04/instcon-draft

08-23-2018

06:17 PM

Thanks for providing this for everyone Laura!

If anyone has any follow-up questions let me know!

08-23-2018

10:11 AM

I second this request! TIA

08-22-2018

04:34 PM

Hi @acompton

I think that Mark Twain said it best: "There are three kinds of lies: lies, damned lies, and statistics!" This might not seem helpful for the questions you are seeking answers to, but it is very important. Statistics provide only a "what", and seldom a "why"! Statistics can also be overly holistic, lumping all sorts of stuff into one container and then saying that the entire container is flawed. A good example pertinent to your question is that most stats on online persistence, retention, and completion lump all online formats together, seldom distinguishing fully online from hybrid or from web-enhanced. At my own school, our hybrid online courses outperform f2f courses by an average of 7%, every time, term after term.

Statistics also never examine any cause/effect relationships behind discrepancies in persistence, retention, and completion. Simple things like: how well are teachers trained to use the tech, to design effective courses, and to understand effective online pedagogy? How well are students oriented to the tech, and to the differences between online learning and traditional learning? Do students have access to reliable technology (devices and software) and reliable internet access? Is there equivalent learner support between online and f2f learning? All of these factors can contribute to statistically significant variances that, in many respects, do not reflect the true effectiveness of online learning when all factors are equal.

Now, @jared has already chimed in to this discussion, and that is a good thing. I don't know if you are familiar with Jared and his role at Instructure, but I can assure you that his focus has always been the human side of tech, and that tech is just a tool to deliver teaching. He is also very current in the literature, so he should be able to help with your lit search.

Kelley

08-23-2018

07:34 AM

Hi @kmeeusen ,

I completely agree about statistics and believe that, generally speaking, you can make numbers say anything you want. I also agree wholeheartedly that these studies rarely do a good job of taking into account things like the tech-readiness of students, academic preparedness, and motivation for taking online courses in the first place. Unfortunately, most reporting bodies ask for numbers and stats, which is why I'm on this quest as well.

It sounds like you all have the formula right with your online program for sure. I think hybrid is a great option, and I'm hoping to get more of our faculty to consider trying hybrid with some of their current f2f courses. I think the mix of the two modes of delivery gives students a great experience. Our online courses currently have a success rate of 73%, averaged over the last 3 academic years.

08-23-2018

07:41 AM

Rather than treating delivery modes as the determining factor (I think that is the flaw behind all of these studies; they focus on delivery mode without examining course design), my guess is that you will get the biggest boost from working on the way people teach within any delivery mode. Student-centered design is one approach that has enormous power to improve completion rates and other measures of success, and how you can use student-centered design will vary with the delivery mode, the size of the class, the specific learning goals, the characteristics of the students, and on and on. As Kona's experiment shows, the shift to a student-centered design approach can have a big impact. So if you want to raise that 73% success rate for online, I would suggest looking at each class to see how it is, or is not, using student-centered course design principles, and getting feedback from your students constantly throughout the course about what they think could improve the experience.

08-27-2018

02:39 AM

Hi @acompton, I was just reading your comment and got curious about something. You mentioned your online courses have a success rate of 73%. How do you measure success in this context? Does it signify rate of completion? Student satisfaction? Percent of passing students?

08-27-2018

07:24 AM

Hi waaaseee, our institution defines success rate as the share of students who complete a course with an A, B, C, or Pass grade.
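For anyone reproducing this number locally, the definition above reduces to a short calculation that can be run separately for online, hybrid, and f2f sections. This is a minimal Python sketch, not any institution's official method: the grade labels and the denominator (every student with a final grade on record, including D, F, and W) are assumptions, since institutions differ on whether withdrawals count. The function name `success_rate` and the sample grades are hypothetical.

```python
from collections import Counter

# Grades counted as "successful" under the A, B, C, or Pass definition
SUCCESS_GRADES = {"A", "B", "C", "P"}


def success_rate(final_grades: list[str]) -> float:
    """Share of students whose final grade is A, B, C, or P.

    Assumption: the denominator is every student with a final grade on record,
    including D, F, and W (withdrawal).
    """
    if not final_grades:
        raise ValueError("no grades supplied")
    # Normalize labels such as " p " or "a" before counting
    counts = Counter(g.strip().upper() for g in final_grades)
    successes = sum(counts[g] for g in SUCCESS_GRADES)
    return successes / len(final_grades)


# Hypothetical section of 10 students
section = ["A", "B", "B", "C", "P", "D", "F", "W", "A", "C"]
print(f"{success_rate(section):.0%}")  # 70%
```

Dropping the W grades from the denominator would raise the rate for the same section, which is one reason published rates are hard to compare.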

08-27-2018

07:33 AM

I see. Thanks!

08-23-2018

11:39 AM

This is a fascinating question, and I agree with the other comments on how important it is to look for the story behind the bare numbers. I don't know of a national report on this, but I'd be curious to see how individual colleges and states even report this data. For example, many of our faculty teach F2F, online, and hybrid courses, and student success data is reported by teacher, not necessarily by mode of delivery. I wonder: do many teachers who have higher student success rates in one mode struggle in another, or do they tend to be consistently effective across modes? Also, over time, students have learned to shop for online courses the way they have vetted campuses to find the professors or courses they want to take.

08-23-2018

01:45 PM

Those are exactly the kinds of questions that interest me too, d00028799! Although the way I teach in a classroom would obviously be different from the way I teach online, I like to think that my philosophy/practice of teaching would manifest itself in both modes, and my f2f OR hybrid OR online classes would have more in common than one of my online classes would have in common with an online class taught by an instructor who teaches with a different philosophy/practice.

And for what it's worth, in my Orientation Week (happening right now), one of the blog posts the students write is about their initial reaction to the class assignments, hopes, goals, concerns, etc., and from those posts I know that my online class works differently than other online classes they have taken. Which is good: I think we all benefit from a variety of options, and we should make it easier for students to learn more about the different kinds of courses and what they can expect. They deserve something better than RateMyProfessors.com to make those choices, ha ha. But it's better than nothing I guess. 🙂

08-23-2018

01:49 PM

P.S. Since I use an open blog network for that, you can see the students' posts:

One of my all-purpose course design mantras is ASK THE STUDENTS. I always learn unexpected things from their open-ended observations. 🙂

08-24-2018

11:00 AM

laurakgibbs, I definitely agree that this would give students better information than RateMyProfessors.com when choosing the types of classes they want to take. 🙂

08-24-2018

11:05 AM

d00028799, I've actually asked our IR department to give me that information for all three modalities. We collect success rates for individual faculty, but the institution also aggregates that information. I'm curious to see the numbers for all three delivery modes and am hoping to get them soon. The national rates generally show about a 10-12% difference between online and f2f success rates, and hybrid isn't always included. I agree with everyone's comments, though, that course design, student preparedness, and environmental factors play a large role in success and aren't factored into these stats.

08-24-2018

11:09 AM

Good plan Andrea!

We've done this at our campus, and it's important to consider a variety of criteria when defining what success includes: repeats, opportunity gaps, large class sizes, etc.

08-23-2018

05:50 PM

Good resources: the Online Learning Consortium and studies by Allen and Seaman (Babson Survey Research Group). My sense is that there are so many variables that it is difficult to arrive at an accurate number in such a comparison. Are we comparing the exact same F2F course, with the same outcomes, to the online version? To laurakgibbs's point, what about the design?

08-27-2018

10:20 AM

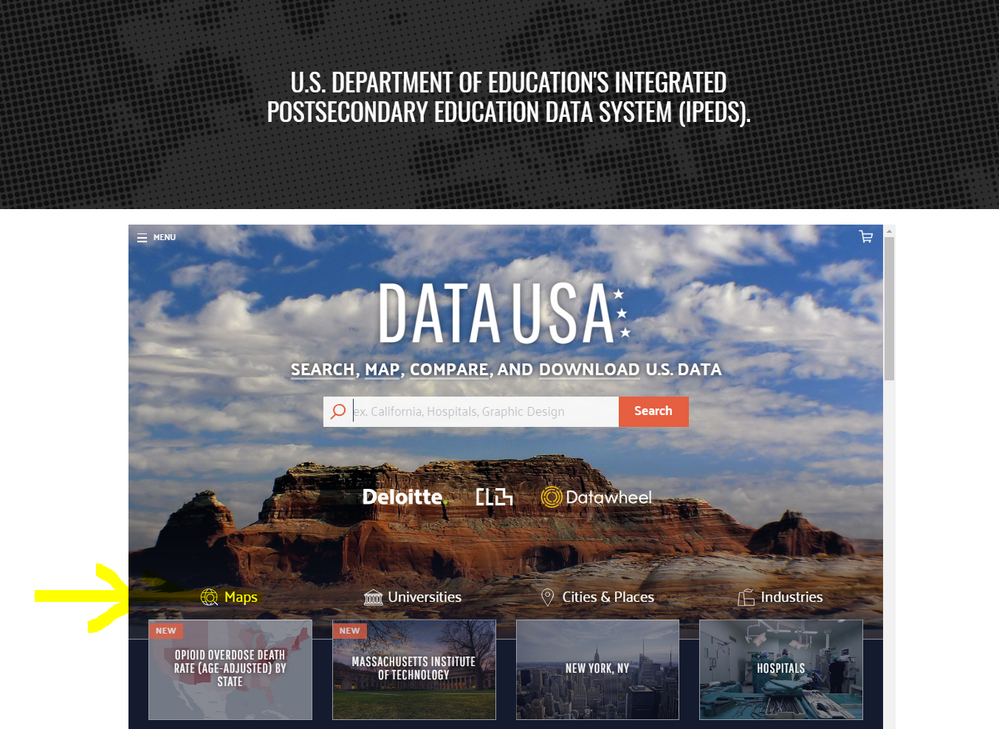

Some of you may or may not know about the US Department of Education's Integrated Postsecondary Education Data System (IPEDS). Here is a link from one of the faculty support pages our college provides (PACS-IPEDS).

Look for the category "Maps"

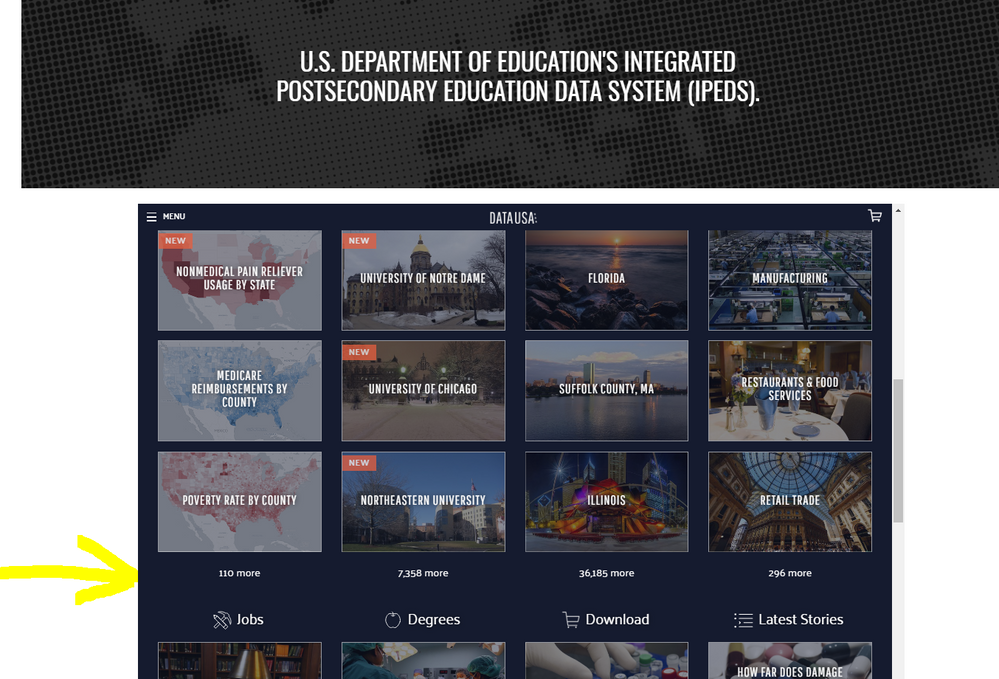

Scroll down until you see "110 more"

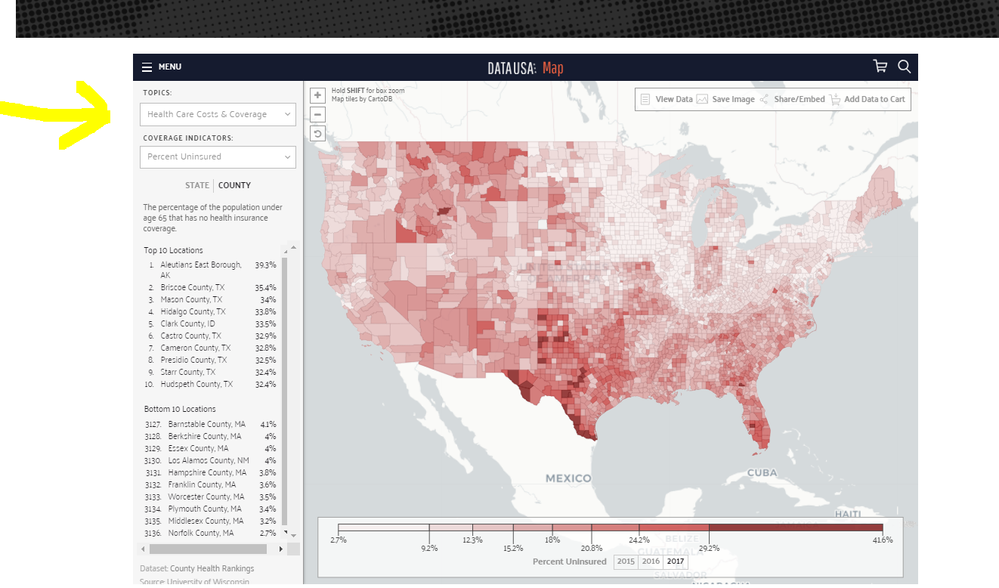

Under "topics" change it to "Education".

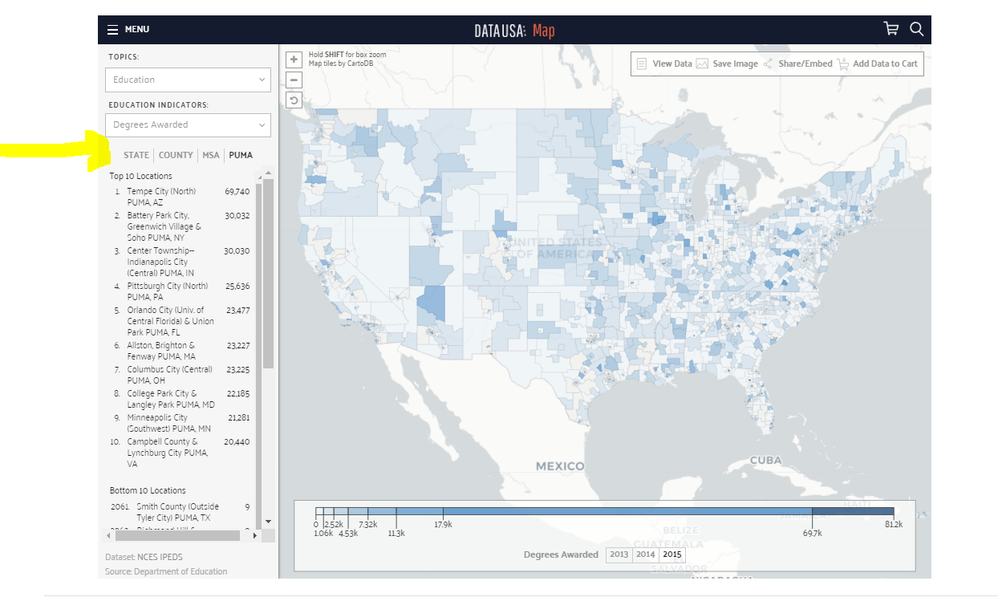

Change "Education Indicators" to "Degree Awarded"

You can also select by "State", "County", "MSA" (Metropolitan Statistical Area), and "PUMA" (Public Use Microdata Area).

I hope this helps.

09-05-2018

04:34 PM

Note: I edited this post extensively after my first draft -- in trying to keep it readable and jargon-free, I ended up misrepresenting a research concept and the importance of certain studies' results.

There is some great discussion and commentary on this thread. I'll try not to be redundant or simply answer the question with, "It depends".

However... it depends. There is a lot of nuance in a comparison of student outcomes and student persistence in online vs face-to-face courses and programs. A lot of that nuance is lost in aggregate analyses that cannot control certain variables. For those reasons, some people believe a straight comparison across modes in aggregate is not appropriate. Some people believe any such comparison tends to focus us on the wrong things. I don't disagree.

Still, if you decide you want a straight comparison of online vs f2f for learning outcomes or for persistence rates I think it's worth pointing out two big pitfalls re. variables:

1. Different instructional methods -- i.e. the teaching or learning method, materials, or assessments in one course are not exactly the same as in the other. If they were the same, one would expect to see no significant difference in learning outcomes. This plays out in the classic book by T.L. Russell, (surprise) The No Significant Difference Phenomenon, and is generally summarized by the position Richard Clark took in the "media debate" of the '80s and '90s, i.e. "[...] media are mere vehicles that deliver instruction but do not influence student achievement any more than the truck that delivers our groceries causes changes in our nutrition".

If we are trying to compare online vs f2f, then presumably mode of delivery is our independent variable, and we control the other variables, like instructional method, as much as possible. If we don't, things tend to fall apart. One recent example that sticks in my mind is a small 2014 study by Caldwell-Harris, Goodwin, Chu, & Dahlen, which compared f2f and video courses and found that students in the f2f section did *better* on the test than students who watched recorded videos of the exact same lecture. The exact same lecture. What's somewhat hidden is that in the live, f2f setting, the teacher interacted with students. Even just eye contact and physically approaching students may have been enough of a difference in treatment, imo, to explain the difference in scores.

2. Different students / samples -- sometimes students in one course are not comparable to students in another course due to different levels of background knowledge, ability, engagement, motivation, financial stability, etc. This is hard to control, as we can't deprive a student of their choice in whether they take a course online or f2f. Indeed, student choice in mode is part of what makes online and blended learning so powerful.

Sample differences may explain findings like this study from 2018, which found, "Students that persist in an online introductory Physics class are more likely to achieve an A than in other modes. However, the withdrawal rate is higher from online Physics courses."

Weird, right?
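One way the denominator alone can produce this pattern: if weaker students withdraw more often from the online section, the A share among those who persist can rise even while the A share among everyone who enrolled falls. The Python sketch below uses made-up counts purely to illustrate that arithmetic; it does not reproduce or explain the 2018 study's data, and the `Section` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Section:
    """Hypothetical outcome counts for one delivery mode (illustrative numbers only)."""
    enrolled: int
    withdrew: int
    a_grades: int  # A grades among students who persisted to the end

    def a_share_of_persisters(self) -> float:
        # Denominator: only students who did not withdraw
        return self.a_grades / (self.enrolled - self.withdrew)

    def a_share_of_enrolled(self) -> float:
        # Denominator: everyone who enrolled, withdrawals included
        return self.a_grades / self.enrolled


online = Section(enrolled=100, withdrew=30, a_grades=25)
f2f = Section(enrolled=100, withdrew=10, a_grades=27)

for name, s in (("online", online), ("f2f", f2f)):
    print(f"{name}: {s.a_share_of_persisters():.1%} of persisters, "
          f"{s.a_share_of_enrolled():.1%} of enrollees")
```

With these invented numbers, online shows the higher A share among persisters (about 35.7% vs 30.0%) but the lower share among all enrollees (25.0% vs 27.0%), so the same data can favor either mode depending on which denominator a study reports.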

I haven't dug into it, but I'd expect to see those factors in play in the study you mention re. California Community Colleges, which found that, overall, students who took online courses performed less well. These aggregate studies rarely compare one course to another on equal terms. That doesn't mean those results are wrong, but more likely they point to deficits in the design of online courses; e.g. some say it's easier to deliver a bad online course than a bad face-to-face course, because at least f2f requires both teacher and students to show up.

Re. persistence, you note the 2013 study, Does online learning impede degree completion? found, "that controlling for relevant background characteristics; students who take some of their early courses online or at a distance have a significantly better chance of attaining a community college credential than do their classroom only counterparts".

Those aside, I am aware of a couple of resources that may help you:

- In 2009 and 2013, researchers published meta-analyses of research studies that compared courses taught online vs blended vs face-to-face, primarily in terms of student outcomes.

- Oregon State University has a very cool Online Efficacy Research Database that is filterable by mode of delivery.

Good luck!

This discussion post is outdated and has been archived. Please use the Community question forums and official documentation for the most current and accurate information.