API Rate Limiting

Last year we updated our API rate limit policy. Since then I've been asked quite a few questions about how the rate limits work, and I've heard from quite a few schools concerned that their applications will be limited.

tl;dr The API is rate limited based on the API token (not the account, instance, or user). Exceeding rate limits will result in a 403 Forbidden (Rate Limit Exceeded) error. API calls made sequentially shouldn't be an issue. However, you may run into issues if you're running concurrent API requests with the same token.

Terms:

| Term | Definition |
| --- | --- |
| Cost | CPU time + database time (in seconds) |
| High Water Mark | Size of the "bucket": the maximum allowable un-leaked cost (default is 700 as of this writing) |
| Leak | Amount of cost subtracted from the bucket between checks (outflow * timespan) |
| Outflow | Rate of bucket cost reduction (currently 10 units per second) |
| Timespan | Time in seconds between the last check and the current check |
| Bucket cost | Aggregate of un-leaked cost subtracted from the high water mark (remaining cost available in the bucket) |

General

Canvas monitors API requests to prevent abuse and/or excessive use. We do this to ensure that the rest of the environment and other schools are not negatively impacted by a single user or application. Canvas limits are imposed at the token level, so it’s helpful to create a separate token for each application that has a significantly different purpose.

So how does Canvas determine rate limits?

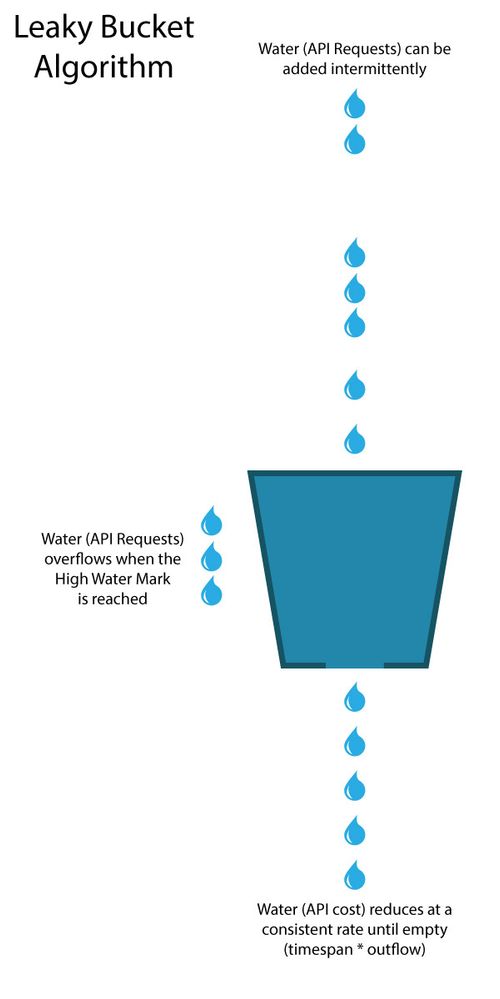

Canvas employs a leaky bucket algorithm. Imagine a bucket with a hole in the bottom. You continually add water to the bucket and it trickles out of the hole in the bottom at a consistent rate. If you were to increase the inflow rate faster than the hole was able to drain the water you'd eventually fill the bucket. The top of the bucket is called a high water mark. When you reach your high water mark the water will overflow the sides of the bucket. The overflow would represent rate limited API calls.

In the leaky bucket algorithm the water represents the cost of API calls. Some API calls cost more than others. This is akin to larger water droplets. For example, a GET call to retrieve your user information will cost less than a POST call to create a new user. The cost of any given API request is determined as the CPU time (in seconds) plus the database time (in seconds).

When you first make a request, Canvas adds an upfront cost of 50 units. Once the true cost of the call is determined, the 50-unit upfront cost is subtracted. This is done to prevent multiple simultaneous requests from overflowing the bucket before Canvas can calculate their cost. Sending multiple simultaneous calls will quickly fill the bucket regardless of the true cost of each call (until the upfront cost is removed).

As more and more requests are made, the bucket will begin to fill. Once the un-leaked cost exceeds the high water mark (that is, no cost remains available in the bucket), you will receive a 403 Forbidden (Rate Limit Exceeded).
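To see why simultaneous calls fill the bucket so quickly, here is a minimal sketch of the upfront cost in Python. It assumes the defaults quoted in this post (a 700-unit high water mark and a 50-unit upfront cost), that none of the requests has finished yet, and that a request is rejected once its upfront cost no longer fits. The function name is made up for illustration, and the exact check Canvas performs may differ.

```python
HIGH_WATER_MARK = 700.0  # default bucket size quoted in this post
UPFRONT_COST = 50.0      # provisional cost added when a request starts


def admitted_before_limit(concurrent_requests: int) -> int:
    """Count how many simultaneous requests fit before the bucket is full.

    Assumption: no request has finished yet (so no upfront cost has been
    removed) and leakage is ignored because the requests arrive together.
    """
    remaining = HIGH_WATER_MARK
    admitted = 0
    for _ in range(concurrent_requests):
        if remaining < UPFRONT_COST:
            break  # this request would overflow the bucket: rate limited
        remaining -= UPFRONT_COST
        admitted += 1
    return admitted
```

Under these assumptions, `admitted_before_limit(20)` returns 14, since 14 x 50 units already uses up the full 700, no matter how cheap each call turns out to be.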

How does the bucket "leak"?

The bucket will leak at the rate of outflow * timespan. The outflow defaults to 10 units per second (but is subject to change). The timespan is the time in seconds between the last check and the current check.

When a new API request is made, Canvas determines the current bucket cost, the leak, and the cost of the current request. It then reduces the bucket cost by the request cost and adds back the leak (i.e. new bucket cost = bucket cost - request cost + leak, where the bucket cost is the high water mark minus the sum of un-leaked previous API costs).

This means that a completely full bucket (assuming High Water Mark as of this writing of 700 units) could be completely emptied within 70 seconds.
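The bookkeeping above can be sketched as a small Python model. This is only an illustration built from the defaults in this post, not Canvas's actual implementation: the class and method names are hypothetical, time is passed in explicitly so the example is deterministic, and capping the remaining cost at the high water mark before charging the request is an assumption.

```python
from dataclasses import dataclass


@dataclass
class LeakyBucket:
    """Toy model that tracks the remaining cost available in the bucket."""

    high_water_mark: float = 700.0  # default quoted in this post
    outflow: float = 10.0           # units leaked per second
    remaining: float = 700.0        # an idle bucket has its full allowance
    last_check: float = 0.0         # time of the previous check, in seconds

    def charge(self, now: float, request_cost: float) -> float:
        """Apply the leak since the last check, then charge one request."""
        timespan = now - self.last_check
        leak = self.outflow * timespan
        # Assumption: leaked cost can never push the remaining allowance
        # above the high water mark.
        refilled = min(self.high_water_mark, self.remaining + leak)
        self.remaining = refilled - request_cost
        self.last_check = now
        return self.remaining
```

Starting from an exhausted bucket, `LeakyBucket(remaining=0.0).charge(now=70.0, request_cost=0.0)` returns 700.0, which matches the 70 seconds worked out above. A process that makes one 2-unit request per second stays at 698.0 after every call, because the 10 units leaked each second more than cover the cost.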

This also means that running processes sequentially should not trigger rate limiting. The bucket will leak faster than it's possible to fill (again assuming the same default settings as of this writing). Depending on the requests, it's possible you could run some parallel processes without hitting your limit as well. This will vary from request to request, but you probably wouldn't be able to run a large number of parallel requests, particularly when you account for the upfront cost.

How do I keep track of all of this?

Canvas will include several fields in the headers of the response to your request. The field name X-Rate-Limit-Remaining is pretty self-explanatory: it's the amount of cost still available in the bucket, so a low number in this field can be cause for concern. You'll also receive X-Request-Cost, which is the cost of the current call. If you do need to run requests in parallel, you can use these values to decide how quickly to send them.
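As a rough sketch of how a client might act on these headers, the Python function below suggests how long to pause before the next request. The header name comes from this post; the function name, the 100-unit threshold, and the idea of waiting for the bucket to leak back up to that threshold are assumptions made for illustration.

```python
from typing import Mapping


def suggested_pause(
    headers: Mapping[str, str],
    low_water: float = 100.0,  # hypothetical safety threshold
    outflow: float = 10.0,     # default leak rate quoted in this post
) -> float:
    """Return seconds to wait so the bucket can recover to `low_water`."""
    # Header names are case-insensitive in HTTP, so normalize the keys.
    lowered = {name.lower(): value for name, value in headers.items()}
    raw = lowered.get("x-rate-limit-remaining")
    if raw is None:
        return 0.0  # header missing: nothing to base a pause on
    remaining = float(raw)
    if remaining >= low_water:
        return 0.0
    # Time for the leak to restore the difference at `outflow` units/second.
    return (low_water - remaining) / outflow
```

For example, a response carrying `X-Rate-Limit-Remaining: 40.0` yields a suggested pause of 6.0 seconds with these defaults. Pass it the headers mapping from whichever HTTP client you use and sleep for the returned duration before sending the next call.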

I'm pretty sure I'm going to hit my rate limit. What are my options?

We've got a couple of options in place to ensure you're able to run your applications effectively.

If you're running API calls on behalf of users, developer tokens are a great option for you. For example, say you've developed an application for students to submit an assignment. This is a great case for developer tokens, which allow you to programmatically generate individual access tokens for users. When a user logs into your application, you can generate a token for that individual user to submit their assignment. At the same time, another student can log in and generate their own access token. Because we rate limit based on the token, these two students won't interfere with each other when submitting their assignments.
