District Gradebook Submissions Report via API


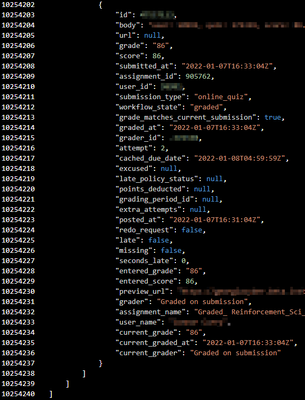

We are a fully virtual school serving 12,500 students in grades K-12, and part of our "compliance" grade is determining:

- how many assignments the student has been assigned per course

- how many per assignment group per course

- out of __ assignments, ___ were completed, expressed as a percentage (%)

- what the student's individualized (cached) due date was

- whether the assignment was completed

- if so, whether it was completed late

In other words, has the student been compliant in completing assignments?
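Once the submissions are downloaded, the counts in the list above are simple tallies. The sketch below is one hypothetical way to compute them per student, per course, and per assignment group. It assumes each record carries `user_id`, `course_id`, and `assignment_group_id` (the last two may have to be copied in from your request or from `include[]=assignment`), treats any `workflow_state` other than `"unsubmitted"` as completed, and leaves excused work out. Those rules are assumptions to adjust to your own compliance policy.

```javascript
// Sketch: per-student, per-course compliance counts from Canvas submissions.
// ASSUMPTIONS (adjust to your own rules):
//  - each record carries user_id, course_id and assignment_group_id; the last
//    two may need to be copied in from the request or from include[]=assignment
//  - "completed" means workflow_state is anything other than "unsubmitted"
//  - excused submissions are left out of the counts entirely
function summarizeCompliance(submissions) {
  const summary = {}; // summary[user_id][course_id] -> counts
  for (const s of submissions) {
    if (s.excused) continue;
    const student = (summary[s.user_id] ??= {});
    const course = (student[s.course_id] ??= { assigned: 0, completed: 0, late: 0, groups: {} });
    const group = (course.groups[s.assignment_group_id] ??= { assigned: 0, completed: 0, late: 0 });
    const done = s.workflow_state !== "unsubmitted";
    for (const bucket of [course, group]) {
      bucket.assigned += 1;
      if (done) bucket.completed += 1;
      if (done && s.late) bucket.late += 1; // Canvas marks late submissions with late: true
    }
  }
  // Completion percentage for every course and assignment-group bucket
  for (const student of Object.values(summary)) {
    for (const course of Object.values(student)) {
      for (const bucket of [course, ...Object.values(course.groups)]) {
        bucket.percentComplete =
          bucket.assigned === 0 ? 0 : Math.round((100 * bucket.completed) / bucket.assigned);
      }
    }
  }
  return summary;
}
```

For example, `summarizeCompliance(rows)[studentId][courseId].percentComplete` gives the "out of __ assignments, ___ were completed" figure for one student in one course.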

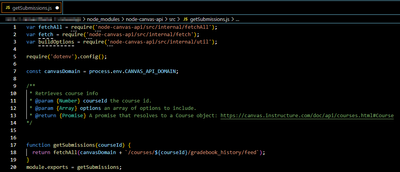

The GET Submissions API call returns all the data needed. Some background:

- I am a total noob to coding and API calls. I'm taking a course to learn, but at the moment I know just enough to be dangerous 😂

- I'm using JavaScript and Visual Studio Code. I started out in Postman but couldn't get the call to work there.

- An image of a sample submissions file is attached.

- Working with @ChrisPonzio


I'll attempt to address the original issue, although Canvas Data is another way to go. I gather all of this information (and more) nightly in about 10 minutes, but we only have 400-500 courses. I archive the data locally, so each night I only have to fetch the new data, which goes a lot faster.
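A local archive like the one described above can be as simple as a map of submissions keyed by their `id`, plus the newest timestamp seen so far. The sketch below assumes a hypothetical `{ byId, lastSeen }` shape that you would persist however you like (a JSON file, a database); `lastSeen` is the kind of value you could pass as `submitted_since` or `graded_since` on the next run.

```javascript
// Sketch of an incremental archive: keep every submission keyed by its id,
// overwrite entries that came back in tonight's fetch, and remember the
// newest timestamp seen so the next run can ask only for newer data.
// ASSUMPTION: the { byId, lastSeen } shape is hypothetical; persist it however you like.
function mergeIntoArchive(archive, fetched) {
  const byId = { ...archive.byId };
  let lastSeen = archive.lastSeen; // ISO 8601 string, or null on the first run
  for (const sub of fetched) {
    byId[sub.id] = sub; // the newer copy replaces the archived one
    for (const stamp of [sub.submitted_at, sub.graded_at]) {
      if (stamp && (!lastSeen || Date.parse(stamp) > Date.parse(lastSeen))) lastSeen = stamp;
    }
  }
  return { byId, lastSeen };
}
```

Keying by `id` means a regraded submission simply replaces its older copy instead of being stored twice.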

I see two or three potential issues.

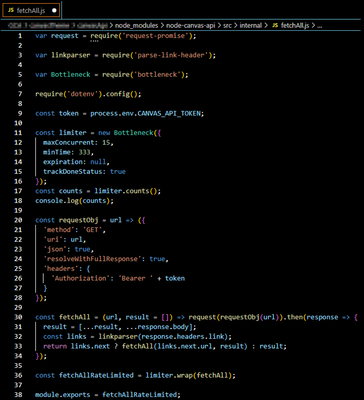

I'm not sure what fetchAll() is, but the name makes it sound like it expects an array of URLs rather than a single URL; fetch() is what you use for a single URL. On the other hand, it might take a single request and then follow the Link headers to see what else needs to be fetched. It's hard to know because fetchAll() isn't a built-in JavaScript function, so this isn't my major concern. Be aware, though, that some bad fetchAll() implementations out there blindly increase the page number without checking the Link headers.
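Following the Link headers, rather than guessing page numbers, looks roughly like the sketch below. `fetchAllPages` is a hypothetical helper, not the poster's `fetchAll()`; it requests a page, appends the JSON array it returns, and stops when the `Link` header no longer contains a `rel="next"` entry. `fetchImpl` defaults to the global `fetch` (Node 18+), and node-fetch can be passed in instead.

```javascript
// Return the URL tagged rel="next" in a Link header, or null if there is none.
function parseNextLink(linkHeader) {
  if (!linkHeader) return null;
  const match = linkHeader.match(/<([^>]+)>;\s*rel="next"/);
  return match ? match[1] : null;
}

// Hypothetical helper: fetch every page of a Canvas list endpoint by following
// the rel="next" links that Canvas returns, instead of incrementing page numbers.
async function fetchAllPages(url, token, fetchImpl = globalThis.fetch) {
  const results = [];
  let next = url;
  while (next) {
    const res = await fetchImpl(next, { headers: { Authorization: `Bearer ${token}` } });
    if (!res.ok) throw new Error(`HTTP ${res.status} for ${next}`);
    const page = await res.json(); // each page is a JSON array
    results.push(...page);
    next = parseNextLink(res.headers.get("link"));
  }
  return results;
}
```

The loop ends on the last page because Canvas omits the `rel="next"` link there, so no request is wasted on an empty page.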

Each fetch() returns a promise. If you don't treat it as one (by awaiting it or chaining .then()), your program tends to finish before the API calls complete.
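The difference is easiest to see side by side. In this sketch `fetchImpl` stands in for `fetch()` so it runs without a network; both functions start the same request, but only the second waits for the response before using it.

```javascript
// Without await: the request starts, but the function returns immediately,
// long before the .then() callbacks run, so the count is always 0.
function countWithoutAwait(url, fetchImpl) {
  let count = 0;
  fetchImpl(url)
    .then((res) => res.json())
    .then((rows) => {
      count = rows.length; // runs later, after the return below
    });
  return count;
}

// With await: execution pauses until the response (and its JSON body) arrive.
async function countWithAwait(url, fetchImpl) {
  const res = await fetchImpl(url);
  const rows = await res.json(); // json() also returns a promise
  return rows.length;
}
```

Because `countWithAwait` is itself async, its caller must also await it (or use `.then()`); at the top level of a script, `main().catch(console.error)` is a common way to do that.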

Watch out for throttling. There is a limit to how hard you can hit the API at one time. Each response includes an X-Rate-Limit-Remaining header, and if it drops to 0, your requests will fail. The throttling algorithm is set up so that if you hit it with all of your requests at once, they will start failing rather quickly.
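One simple way to respect the header described above is to read it after every response and pause when it gets low. In this sketch the `threshold` and `pauseMs` values are hypothetical numbers to tune, not limits published by Canvas, and `fetchImpl` defaults to the global `fetch`.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Sketch: fetch one URL, then check X-Rate-Limit-Remaining and pause if it is
// below a (hypothetical) threshold, giving the rate-limit bucket time to refill.
async function fetchWithRateCheck(
  url,
  token,
  fetchImpl = globalThis.fetch,
  { threshold = 100, pauseMs = 2000 } = {}
) {
  const res = await fetchImpl(url, { headers: { Authorization: `Bearer ${token}` } });
  // The value may be a decimal string; a missing header parses to NaN and is ignored
  const remaining = parseFloat(res.headers.get("x-rate-limit-remaining"));
  if (!Number.isNaN(remaining) && remaining < threshold) {
    await sleep(pauseMs);
  }
  return res;
}
```

This only slows down a single sequence of calls; when many requests run in parallel, a limiter that caps concurrency is still needed.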

What I do is incorporate a throttling library (I use Bottleneck for JavaScript). I load node-fetch and then wrap it inside a Bottleneck limiter. Depending on the call I'm making, I may limit it to 10 concurrent API calls with a minimum of 100 ms between each one, or I might go up to 30 with a minimum delay of 25 ms. Getting the submissions may be intensive, so I would use a non-aggressive rate and then be prepared to wait. Slow and steady wins the race.
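To show what a limiter with those two settings does, here is a small dependency-free stand-in. It is not Bottleneck's code; it only mimics the two options mentioned above: at most `maxConcurrent` jobs run at once, and job starts are spaced at least `minTime` ms apart. `schedule(job)` takes a function that returns a promise and resolves with that promise's result.

```javascript
// Minimal stand-in for a Bottleneck-style limiter (NOT Bottleneck itself).
function createLimiter({ maxConcurrent, minTime }) {
  const queue = [];
  let running = 0;
  let nextStart = 0; // earliest timestamp at which the next job may start

  function pump() {
    while (running < maxConcurrent && queue.length > 0) {
      const { job, resolve, reject } = queue.shift();
      running += 1;
      const wait = Math.max(0, nextStart - Date.now());
      nextStart = Math.max(Date.now(), nextStart) + minTime;
      setTimeout(() => {
        Promise.resolve()
          .then(job)
          .then(resolve, reject)
          .finally(() => {
            running -= 1; // free the slot and start whatever is queued next
            pump();
          });
      }, wait);
    }
  }

  return {
    schedule(job) {
      return new Promise((resolve, reject) => {
        queue.push({ job, resolve, reject });
        pump();
      });
    },
  };
}
```

With Bottleneck installed, the equivalent is `const limiter = new Bottleneck({ maxConcurrent: 10, minTime: 100 })` followed by `limiter.schedule(() => fetch(url))`, or `const limitedFetch = limiter.wrap(fetch)` to get a throttled drop-in for `fetch`.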

When I fetch the submissions, I use maxConcurrent=100 and minTime=50 when I am fetching only the recent submissions (using the graded_since or submitted_since parameters), and maxConcurrent=30 and minTime=250 if I'm doing a complete fetch. I strongly recommend using the _since parameters to speed up the process.
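A request for one course's submissions using the _since parameters could be built like this. The sketch targets Canvas's "List submissions for multiple assignments" endpoint (`/api/v1/courses/:course_id/students/submissions`); `baseUrl`, the `submissionsRequest` name, and the choice of `include[]=assignment` are illustrative assumptions, while the limiter numbers are the ones quoted above.

```javascript
// Sketch: build the submissions URL for one course, optionally restricted to
// recent activity, and pick limiter settings to match (numbers from the post).
// Pass sinceParam = "graded_since" to catch newly graded work instead.
function submissionsRequest(baseUrl, courseId, since = null, sinceParam = "submitted_since") {
  const url = new URL(`/api/v1/courses/${courseId}/students/submissions`, baseUrl);
  url.searchParams.append("student_ids[]", "all");
  url.searchParams.append("include[]", "assignment"); // brings in assignment details such as the group id
  url.searchParams.set("per_page", "100"); // bigger pages mean fewer requests
  if (since) url.searchParams.set(sinceParam, since);
  const limits = since
    ? { maxConcurrent: 100, minTime: 50 } // incremental fetch
    : { maxConcurrent: 30, minTime: 250 }; // complete fetch
  return { url: url.toString(), limits };
}
```

For example, `submissionsRequest("https://canvas.example.edu", 42, "2024-05-01T00:00:00Z")` returns the incremental URL together with the faster limiter settings.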

As for the promises, I start with an array, add the fetch calls to the array, and then return Promise.all() on the array. It could be a bit more robust; I don't do much checking for failed requests, but thankfully they don't happen often.
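The array-then-`Promise.all()` pattern just described looks like this in outline. `fetchCourse` is a hypothetical per-course function that returns a promise (for example, one that pages through a course's submissions).

```javascript
// Sketch: start one request per course, keep each promise in an array,
// and wait for all of them together.
function fetchEveryCourse(courseIds, fetchCourse) {
  const pending = [];
  for (const id of courseIds) {
    pending.push(fetchCourse(id)); // the request starts now; we keep its promise
  }
  // Resolves to results in the same order as courseIds; rejects if any one fails
  return Promise.all(pending);
}
```

If one failed course should not discard everything else, `Promise.allSettled(pending)` returns a `{ status, value | reason }` entry per course instead of rejecting on the first failure.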

If you are putting 1400 requests into the array at once, you may run into other issues. I've had Node fail with an error that makes it look like it cannot find the hostname, and other people suggest the cause is putting in too many requests at once. It really only seems to happen when I'm using my Windows machine (I have three ISPs at home, and some are flakier than others; when I run it from my server, which is set to always use the same ISP, it behaves better).

With that many requests, what I might do is implement two Bottleneck limiters. I would feed the first one the course IDs and limit it to a few at a time, with no fetch calls directly inside it. Each of those jobs would then call your fetchAll() routine to actually get the data, which would send its requests through the second limiter. That way, the queue most likely to fail (the Canvas API calls) isn't getting hit too heavily, and you get all of the data for one course before moving on. Right now, my routines make the first submissions API call for each course and then mix the fetch() calls for all of the pagination requests in with those. If something fails, it still continues to make all of the calls, even though it can't process them.
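The two-limiter arrangement described above can be sketched as follows. `createLimiter` is again a small dependency-free stand-in for a Bottleneck limiter (not Bottleneck's code), the limiter settings passed in are hypothetical, and `fetchImpl` is assumed to be a `fetch` that already adds your `Authorization` header. The course limiter never fetches anything itself; it only decides which courses are active, while every page request goes through the API limiter.

```javascript
// Dependency-free stand-in for a Bottleneck limiter (maxConcurrent + minTime).
function createLimiter({ maxConcurrent, minTime }) {
  const queue = [];
  let running = 0;
  let nextStart = 0;
  function pump() {
    while (running < maxConcurrent && queue.length > 0) {
      const { job, resolve, reject } = queue.shift();
      running += 1;
      const wait = Math.max(0, nextStart - Date.now());
      nextStart = Math.max(Date.now(), nextStart) + minTime;
      setTimeout(() => {
        Promise.resolve().then(job).then(resolve, reject)
          .finally(() => { running -= 1; pump(); });
      }, wait);
    }
  }
  return {
    schedule: (job) => new Promise((resolve, reject) => { queue.push({ job, resolve, reject }); pump(); }),
  };
}

// Follow rel="next" Link headers, sending every page request through apiLimiter.
async function fetchAllPages(url, apiLimiter, fetchImpl) {
  const rows = [];
  let next = url;
  while (next) {
    const current = next;
    const res = await apiLimiter.schedule(() => fetchImpl(current));
    if (!res.ok) throw new Error(`HTTP ${res.status} for ${current}`);
    rows.push(...(await res.json()));
    const match = (res.headers.get("link") || "").match(/<([^>]+)>;\s*rel="next"/);
    next = match ? match[1] : null;
  }
  return rows;
}

// Course limiter admits a few courses at a time; each course's pages are
// fetched through the shared API limiter before the course's slot frees up.
function fetchCourses(courseIds, urlForCourse, fetchImpl, opts) {
  const courseLimiter = createLimiter(opts.course);
  const apiLimiter = createLimiter(opts.api);
  return Promise.all(
    courseIds.map((id) =>
      courseLimiter.schedule(() => fetchAllPages(urlForCourse(id), apiLimiter, fetchImpl))
    )
  );
}
```

A call such as `fetchCourses(ids, urlFor, authedFetch, { course: { maxConcurrent: 3, minTime: 0 }, api: { maxConcurrent: 10, minTime: 100 } })` would keep only three courses in flight while capping the Canvas calls at the gentler API rate; the result array lists each course's rows in the same order as `ids`.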