
CD2 Table not initializing via dap


10-05-2023

12:43 PM

I am running the following command with dap client version 0.3.14. For this specific table (submission_versions) it downloads only 5-6 files and then dies, with no error returned (see attached abbreviated log).

dap --loglevel debug initdb --namespace canvas --table submission_versions

Has anyone seen this and can help with troubleshooting? I saw this intermittently with a few other tables, which eventually resolved on their own for some unknown reason, but this last table has never worked. I suspect it may simply be large, but I wonder why only 5-6 of the 25 pieces get downloaded. Based on the last line of output before it dies, I am guessing it may be a Postgres database issue, but I am not sure. I am syncing to an Azure managed PostgreSQL instance from an Azure VM and have verified that there is adequate disk space available on the database, but beyond that I am new to PostgreSQL.

I am also curious: since there are ~90 tables included with CD2, would people be willing to post which tables they use most frequently, to help us limit scope as we get up to speed?

Thank you in advance!!

14 Replies


10-09-2023

06:46 AM

We see a similar outcome when trying to pull a large table (13 file chunks in our case). The error seems to imply that the data wasn't received successfully, or possibly that something went wrong during decompression. The only reason I mention it is that it also happens at around the 5-6 piece mark.


10-09-2023

11:55 AM

We've been running into issues with DAP too. One of our devs reported to our CSM an error she gets when running the DAP initialize method for the content_tags table (in the canvas namespace). She can successfully run the initialize command to create other tables, so she assumes the problem lies in the content_tags table's data. Perhaps one record has a bad content_id? Our CSM said that the Instructure team needed to do more troubleshooting. She asked our dev to email the error message (you might want to send your log) and the CLI version we're on to canvasdatahelp@instructure.com


10-13-2023

09:59 AM

Thank you so much! I just sent an email to them to see what they can come up with!


10-24-2023

07:08 AM

I am experiencing the same issue with content_tags. The dap initdb command fails only for that table. Every other table was initialized without issue.

I generated a debug log and only two lines reference an error:

1) 2023-10-24 08:45:00,620 - ERROR - 'content_id'

2) KeyError: 'content_id'


10-25-2023

06:33 AM

Yup, we get the content_tags id issue too. I guess perhaps there's a schema or data model problem.


11-14-2023

12:09 AM

Same here.

We use the latest version, 'instructure-dap-client 0.3.16'. When the code initializes 'content_tags', it shows the error below and the process stops.

=====================================================================================================

Traceback (most recent call last):
  File "/home/adm1/cd2/script/init_cd2db_ind_table.py", line 25, in <module>
    asyncio.run(main());
  File "/usr/lib/python3.10/asyncio/runners.py", line 44, in run
    return loop.run_until_complete(main)
  File "/usr/lib/python3.10/asyncio/base_events.py", line 649, in run_until_complete
    return future.result()
  File "/home/adm1/cd2/script/init_cd2db_ind_table.py", line 21, in main
    await SQLReplicator(session, db_connection).initialize("canvas", "content_tags")
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/replicator/sql.py", line 67, in initialize
    await client.download(
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/downloader.py", line 83, in download
    await wait_n(
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/concurrency.py", line 49, in wait_n
    raise exc
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/downloader.py", line 81, in logged_download_and_save
    await self._download(db_lock, context_aware_object, processor=processor)
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/downloader.py", line 113, in _download
    await processor.process(obj, records)
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/integration/base_processor.py", line 19, in process
    await self.process_impl(obj, self._convert(records))
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/plugins/postgres/init_processor.py", line 63, in process_impl
    await self._db_connection.execute(
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/integration/base_connection.py", line 41, in execute
    return await query(self._raw_connection)
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/plugins/postgres/queries.py", line 26, in __call__
    return await self._query_func(asyncpg_conn)
  File "/home/adm1/cd2/lib/python3.10/site-packages/asyncpg/connection.py", line 1081, in copy_records_to_table
    return await self._protocol.copy_in(
  File "asyncpg/protocol/protocol.pyx", line 561, in copy_in
  File "asyncpg/protocol/protocol.pyx", line 478, in asyncpg.protocol.protocol.BaseProtocol.copy_in
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/plugins/postgres/init_processor.py", line 81, in _convert_records
    yield tuple(converter(record) for converter in self._converters)
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/plugins/postgres/init_processor.py", line 81, in <genexpr>
    yield tuple(converter(record) for converter in self._converters)
  File "/home/adm1/cd2/lib/python3.10/site-packages/dap/conversion_common_json.py", line 82, in <lambda>
    return lambda record_json: record_json["value"][column_name]
KeyError: 'content_id'

==================================================================================================

According to 'lib/python3.10/site-packages/dap/conversion_common_json.py', line 82 is the branch taken when type_cast is None:

    if type_cast is None:
        return lambda record_json: record_json["value"][column_name]

Does anyone have an idea about this?

Thanks
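To illustrate why that line raises, here is a minimal sketch that reuses only the lambda quoted above. The records are made up, and `lenient_converter` is a hypothetical variant for comparison, not something the dap client is known to provide. It suggests the failure mode is a record whose "value" object omits the content_id key entirely.

```python
# Stand-in for the converter quoted from dap/conversion_common_json.py.
def strict_converter(column_name):
    return lambda record_json: record_json["value"][column_name]


# Hypothetical variant (an assumption, not dap code): a missing key becomes None.
def lenient_converter(column_name):
    return lambda record_json: record_json["value"].get(column_name)


# Made-up records: the second one has no content_id key at all.
records = [
    {"key": {"id": 1}, "value": {"content_id": 10, "context_id": 100}},
    {"key": {"id": 2}, "value": {"context_id": 200}},
]


def convert_all(converter, rows):
    """Apply the converter to each row, recording a KeyError instead of crashing."""
    results = []
    for row in rows:
        try:
            results.append(converter(row))
        except KeyError as exc:
            results.append(f"KeyError: {exc}")
    return results


strict_results = convert_all(strict_converter("content_id"), records)
lenient_results = convert_all(lenient_converter("content_id"), records)
print(strict_results)
print(lenient_results)
```

If this reading is right, the strict lookup only works when every record carries the column, which is what a "required" column in the schema would promise.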


11-14-2023

08:46 AM

FWIW I think the schema for the content_tags table has been, or is about to be, updated. (Maybe it was updated on 13-NOV-2023, but I haven't directly verified.) The content_id column was marked as required in the schema, but we have multiple records in the data that lack that field.

L


11-15-2023

07:14 AM

Looks like something didn't go as planned, so the schema update was delayed. Sorry to have caused confusion.

As far as I can tell it's still not available. 😞


11-28-2023

06:55 AM

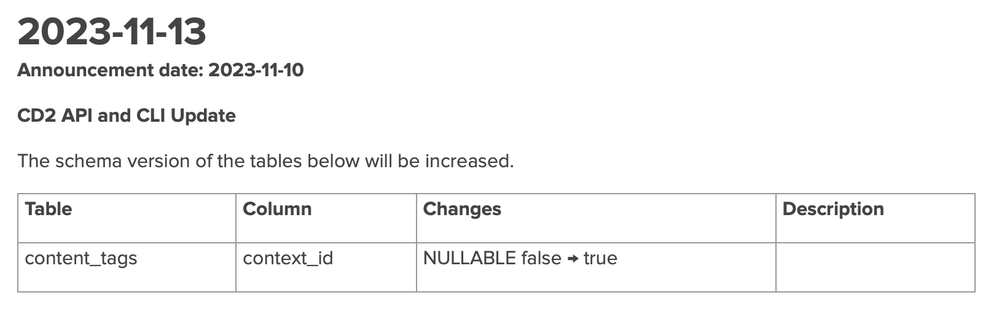

Do you mean the context_id column? According to the 2023-11-13 release notes, the content_tags.context_id column was supposed to be changed to be nullable, but the schema still shows it as required as of today.

@LeventeHunyadi @Edina_Tipter do you know if that 2023-11-13 change to the content_tags schema was actually released?

--Colin


11-30-2023

08:59 AM

Looking at the JSON schema of the content_tags table in the namespace canvas, the column context_id is required but the column content_id is optional. I have retrieved the schema with the command

python3 -m dap schema --namespace canvas --table content_tags

and checked the property required within the sub-tree for value. The property required lists context_id but not content_id.

I have found the same when checking the Python class definitions where the exposed table structure is defined internally. Sadly, the dataset documentation for the table content_tags was not showing earlier whether a column is required or optional but this has been fixed.
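The check described above can be sketched in a few lines. This is only an illustration: `SAMPLE_SCHEMA` is a hypothetical, heavily trimmed stand-in, and the assumption that the required list sits at `properties.value.required` should be verified against the actual output of the schema command.

```python
import json

# Hypothetical, trimmed schema; the real document has many more columns
# and may be wrapped or nested differently.
SAMPLE_SCHEMA = json.loads("""
{
  "type": "object",
  "properties": {
    "key": {
      "type": "object",
      "properties": {"id": {"type": "integer"}},
      "required": ["id"]
    },
    "value": {
      "type": "object",
      "properties": {
        "content_id": {"type": "integer"},
        "context_id": {"type": "integer"}
      },
      "required": ["context_id"]
    }
  }
}
""")


def column_requirements(schema):
    """Map each column under 'value' to True if it is listed as required."""
    value = schema["properties"]["value"]
    required = set(value.get("required", []))
    return {name: name in required for name in value["properties"]}


requirements = column_requirements(SAMPLE_SCHEMA)
for column, is_required in sorted(requirements.items()):
    print(f"{column}: {'required' if is_required else 'optional'}")
```

To run it against a real table, the schema output would be saved to a file and loaded with `json.load` in place of `SAMPLE_SCHEMA`.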


12-01-2023

06:33 AM

Right -- but the API and CLI Changelog says that content_tags.context_id should have been changed to nullable on 11/13. Is the changelog wrong?


12-01-2023

12:57 PM

I am afraid it very much seems that the change-log has a typo.

Looking at the source code, content_tags.content_id was recently changed to optional (nullable). In contrast, content_tags.context_id has remained required (non-nullable). When I check the Canvas database schema from which this data is replicated, I see the same: content_id is optional and context_id is required.

I have reached out to the team to update the change-log. Thanks for pointing this out!


10-18-2023

09:26 AM

I am having issues downloading content_tags, discussion_entry_participants, submission_versions, and content_participations when I run the DAP initdb command in a for loop over a list of tables.

When I then ran initdb manually for these tables one by one, I was able to download content_participations successfully.

I have been doing SYNCDB since then.

I wanted to test this for one week and see if I face any more issues.

As others noted, the output just hangs after a certain number of pieces in the download.

I have sent an email to the support team and am waiting for their response/resolution.

Has anyone tried downloading web_logs with any DAP commands? Any suggestions on how I can do it?
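For anyone scripting the same loop, here is a rough sketch of one way to keep a single hanging table from stalling the rest. It assumes the dap CLI is on the PATH with its credentials and database connection already configured in the environment. The function names and the one-hour timeout are illustrative choices, and the final dry run uses a stand-in runner with pretend results so the sketch executes without dap installed.

```python
import subprocess


def initdb_command(table, namespace="canvas"):
    """Build the same initdb invocation used earlier in this thread."""
    return ["dap", "initdb", "--namespace", namespace, "--table", table]


def run_initdb(table, timeout_seconds=3600):
    """Run initdb for one table; False on a non-zero exit or a timeout."""
    try:
        result = subprocess.run(initdb_command(table), timeout=timeout_seconds)
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child process before raising this.
        return False
    return result.returncode == 0


def initialize_tables(tables, runner=run_initdb):
    """Try every table and return the ones that failed, instead of stopping."""
    failed = []
    for table in tables:
        if not runner(table):
            failed.append(table)
    return failed


# Dry run with a stand-in runner; these outcomes are pretend, not real results.
def fake_runner(table):
    return table != "submission_versions"


failed = initialize_tables(
    ["content_participations", "submission_versions"], runner=fake_runner
)
print("Tables to retry one by one:", failed)
```

Dropping the `runner=fake_runner` argument would make the loop call the real CLI, and the returned list gives the tables worth retrying individually or reporting to support.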


10-24-2023

07:44 AM

$ dap initdb --namespace canvas_logs --table web_logs
