Getting a small, reusable library totally makes sense in your case. Our custom JavaScript only has the dashboard course card sorter in it, so it wouldn't make sense to create an entire library for that, but I'm still using jQuery (I think) because it relies on jQuery UI and that part won't work without jQuery.

I think I might have read that article already ... and decided that for me it was worth it since fetch() was built into the browsers and was easier than xhr() stuff. Then, when I switched to Node, I found the node-fetch library that allowed me to use fetch() so I didn't have to learn something else.

The code I wrote for this project has those helper functions that allow me to reuse things. For example, here's my function to get the assignment groups.

    function getAssignmentGroups(courseId) {
      let query = variableSubstitution('/api/v1/courses/:course_id/assignment_groups', {
        'course_id': courseId,
        'include[]': 'assignments',
        'exclude_response_fields[]': ['rubric', 'description'],
        'override_assignment_dates': false
      });
      return getAPI(query);
    }

Obviously, that could be one statement. The stringification isn't what I would like -- I'm not using JSON, although I probably should -- I'm using the query string. I would have liked to write exclude_response_fields without the [] at the end, but the function I'm using to convert it doesn't add the [] when the field is an array; it only adds numeric keys.
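
A minimal sketch of the behavior I would have liked, not the converter this project actually uses: a hypothetical `toQueryString()` helper that appends the [] whenever a value is an array, so the caller can leave it off the key. It relies only on the built-in URLSearchParams, which percent-encodes the brackets (`%5B%5D`).

```javascript
// Hypothetical helper (not from the original project): serialize a params
// object into a query string, appending [] to the key for array values.
function toQueryString(params) {
  const search = new URLSearchParams();
  Object.entries(params).forEach(([key, value]) => {
    if (Array.isArray(value)) {
      // Strip any [] the caller already added so it is not doubled.
      const name = key.replace(/\[\]$/, '') + '[]';
      value.forEach((item) => search.append(name, String(item)));
    } else {
      search.append(key, String(value));
    }
  });
  return search.toString();
}

const exampleQuery = toQueryString({
  include: ['assignments'],
  exclude_response_fields: ['rubric', 'description'],
  override_assignment_dates: false
});
console.log(decodeURIComponent(exampleQuery));
```

Single values still have to be wrapped in an array to get the [] form, which is the trade-off of keying the behavior off the value type.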

The variableSubstitution helper function isn't really necessary because I built it into the getAPI() function. It looks for :variable_names in the path, replaces them, and removes them from the parameter list. Then it sends the rest of the parameters on to the getAPI() function.
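
The substitution step can be sketched roughly like this. It is an illustration under my own assumptions, not the project's actual helper: the name `substitutePathVariables` and the `{ path, params }` return shape are made up for the example.

```javascript
// Hypothetical sketch: fill :variable_names in an API path from a params
// object and return the parameters that the path did not consume.
function substitutePathVariables(path, params) {
  const used = new Set();
  const filledPath = path.replace(/:([A-Za-z_]+)/g, (match, name) => {
    if (!Object.prototype.hasOwnProperty.call(params, name)) {
      return match; // leave unknown placeholders untouched
    }
    used.add(name);
    return encodeURIComponent(params[name]);
  });
  // Everything not used in the path stays in the parameter list.
  const remaining = {};
  Object.keys(params).forEach((key) => {
    if (!used.has(key)) {
      remaining[key] = params[key];
    }
  });
  return { path: filledPath, params: remaining };
}

const substituted = substitutePathVariables(
  '/api/v1/courses/:course_id/assignment_groups',
  { course_id: 123, 'include[]': 'assignments' }
);
console.log(substituted.path, substituted.params);
```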

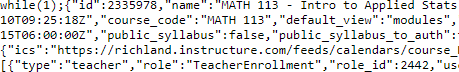

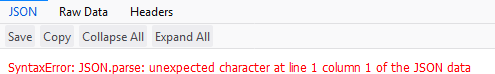

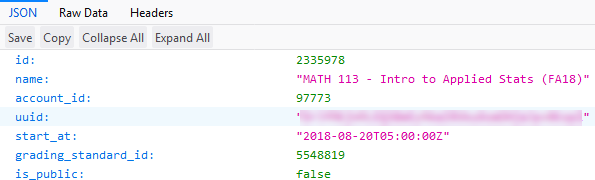

The getAPI() function is the one that adds the headers. This particular program only needs the GET method since I'm basically building my own Canvas Data for certain tables, but with current information. Once it has prepared the URL, it passes it off to another function that takes either a string or an array of strings and calls the API for each of them. That's the bottleneck portion. It also (intelligently?) handles pagination: it goes ahead and generates all of the URLs up front for links with a page=\d+ format and follows them one at a time for the page=bookmark format. When I'm running lots of requests, the first approach isn't necessary, but when I'm testing with just a single course, it sure comes in handy.
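
Here is a rough sketch of that pagination decision, written for illustration rather than copied from the project. The function names are hypothetical, and it assumes the Link header has rel="current", "next", and "last" entries and that the URLs themselves contain no commas.

```javascript
// Parse a Link header of the form <url>; rel="name",<url>; rel="name"
// into an object keyed by rel. Assumes URLs contain no commas.
function parseLinkHeader(header) {
  const links = {};
  (header || '').split(',').forEach((part) => {
    const match = part.match(/<([^>]+)>\s*;\s*rel="([^"]+)"/);
    if (match) {
      links[match[2]] = match[1];
    }
  });
  return links;
}

// Return the URLs still to fetch. With numeric page=\d+ links, every
// remaining page can be generated at once; with page=bookmark links only
// the next URL is known, so pages have to be followed one at a time.
function remainingPageUrls(header) {
  const links = parseLinkHeader(header);
  const pagePattern = /([?&]page=)(\d+)(?=&|$)/;
  const current = links.current && links.current.match(pagePattern);
  const last = links.last && links.last.match(pagePattern);
  if (current && last) {
    const urls = [];
    for (let page = Number(current[2]) + 1; page <= Number(last[2]); page += 1) {
      urls.push(links.last.replace(pagePattern, (m, prefix) => prefix + page));
    }
    return urls;
  }
  return links.next ? [links.next] : [];
}

// Example header using a made-up host name.
const base = 'https://canvas.example.com/api/v1/courses/1/assignment_groups';
const numericHeader = [
  `<${base}?page=1&per_page=10>; rel="current"`,
  `<${base}?page=2&per_page=10>; rel="next"`,
  `<${base}?page=4&per_page=10>; rel="last"`
].join(',');
console.log(remainingPageUrls(numericHeader));
```

The generated URLs can then be handed to the rate-limited fetcher as an array, which is what lets a single course fan out into parallel requests.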

I actually used .forEach() today. It made it a lot easier -- so thank you for sharing that one and insisting on using it when I wanted to for() it. I can't break out of it, but I don't need to, either. I was originally using .map(), but my linter was complaining about needing to return something, so I looked up the difference and decided that I really wanted .forEach() in every case except one. I think I can do away with that one, too: I'm using a promise there when I don't need to, now that I'm not using the recursion with promises anymore. The code is turning into hackish slop, but it's getting done, and sometimes the time constraint is more important than the elegance factor.

I also modified the bottleneck's maxConcurrency issues and split my fetches up into three parts. First I grab enrollments so I have current course, section, and user information. Then I fetch all the assignment groups to get the assignments. Finally, I get all the submissions. The first two could handle 40-50 concurrent sessions, but I backed down to 25 for the last one. It could probably handle more, but I had API calls taking 15 seconds, and it turned out there was a 104-question quiz that was part of that history.

I still don't have my error checking down, but it ran today and downloaded all of the data over a 13-minute period. Now I have to write the code to put it into the CSV format needed by Starfish. There are lots of design questions there, like whether we should exclude zeros from the class average or report the median instead, but we won't really know what we want until we have it running and find out that it doesn't work the way we want it to.

Regarding your code: in your pb.ajax.js, are you going to do different things for stati (statuses?) of 400-422?

I would throw a 'use strict' in there, unless it's already there at a higher level in the same file.