

[ARCHIVED] Canvas Data 2 - Ideas and Feedback


06-05-2019

12:20 PM

The invaluable feedback and ideas that many of you have provided to inform the foundation of Canvas Data 2 are greatly appreciated. We’d now like to do a quick check-in to see how things are working for you and what new ideas you have.

Current Canvas Data Feedback

How are the current feature sets of Canvas Data working for you? Help us understand more by answering the following questions for this discussion.

- What is working well?

- What is not working well?

New Ideas

If you have a new idea, please visit the Ideas page of the Community. Begin by searching for an existing idea like your own. If you do not find one, please submit your idea as new.

It is most helpful if you include the following information in your new idea.

- What is the problem you are trying to solve?

- How will the solution benefit teaching and learning?

Thank you!

Canvas Data Team

19 Replies


06-05-2019

01:50 PM

Hi Gayatri,

Thanks for starting this discussion. When you say "current feature sets", are you discussing the flat file download process or the actual data sets (tables and fields)?

Thanks!

Joni


06-05-2019

02:11 PM

Hi Joni,

Yes, you are correct. The flat file download process and data sets, both.


06-11-2019

09:50 AM

Hi Gayatri:

Regarding file delivery, I know you've spoken to me and many other institutions and everyone is asking for incremental, more frequent updates.

The only other improvement would be a way to check whether the data set is historical. Right now there isn't a good way to do that, so I check to see if there is a user_dim file and, if there is, assume it's historical and skip it. If you are providing incremental, more frequent updates, it would be important to be able to identify this via an API call prior to downloading, so that you can decide whether or not to download those files.

As far as data sets, here are some feature ideas that are currently in the community related to additional data to be included.

These have been posted by me:

https://community.canvaslms.com/ideas/11920-canvas-data-quizzesnext

These were posted by other people:

Thank you so much for reaching out to the community for feedback on improving Canvas Data.

Joni


06-11-2019

11:31 AM

Hi Joni,

Thank you for the detailed note. There will definitely be an easy way to identify the batch as Historical or Delta. Plus, we'll be versioning the files individually.

I am currently analyzing the existing data sets and will make sure to go through each of your posts above.

Warm Regards,

Gayatri


06-11-2019

06:33 PM

Hi Joni,

I'm not sure what you mean about the user_dim file; we get this file every day (i.e. on each sync).

The only way I've found to check if the dump is an historical requests dump is to check the number of files in the dump. This can be seen through the Canvas Admin data portal, or using the canvasDataCli list procedure. We get 95 files every time we sync, but the historical requests dump typically contains only 20 or so. I've included a check in my download script to stop the download for manual intervention if the number of files reported in the three most recent dumps is less than 80.
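A file-count guard like the one described above might look roughly like the following Python sketch. It assumes the dump list has already been fetched and parsed into dictionaries with `sequence` and `numFiles` keys. The function name and the sample data are illustrative assumptions; the threshold of 80 and the window of three dumps come from the description above.

```python
def needs_manual_review(dumps, min_files=80, window=3):
    """Return True if any of the `window` most recent dumps has fewer than
    `min_files` files, which may indicate a historical requests dump."""
    # Newest first, assuming the sequence number increases with each dump.
    recent = sorted(dumps, key=lambda d: d["sequence"], reverse=True)[:window]
    return any(d["numFiles"] < min_files for d in recent)


# Illustrative data only: three regular dumps followed by a small one.
sample = [
    {"sequence": 1256, "numFiles": 95},
    {"sequence": 1257, "numFiles": 95},
    {"sequence": 1258, "numFiles": 95},
    {"sequence": 1259, "numFiles": 20},
]

if needs_manual_review(sample):
    print("Possible historical requests dump; stopping for manual intervention.")
```

As noted later in the thread, file counts vary between institutions and over time, so the threshold would need to be tuned per account.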

According to the documentation, the Canvas Data Loader tool deals with these periodic dumps, though I'm not sure how. We can't use it because it doesn't support Oracle.

Regards,

Stuart.


06-11-2019

06:40 PM

Do the 20 or so files in your historical dump include a user_dim file? I think Joni was saying that she used the absence of a user_dim as her check for historical dumps, rather than relying on the count being less than 80.


06-11-2019

08:20 PM

I think after listening to all three of you I ended up reducing the list of tables in the dump and checking whether it's == "requests". Any other dump is currently reduced to a list of 100 tables for us (everything but catalog_), and I think the full count is 117. Canvas has released new tables twice this year, so counting isn't portable. Joni's test is pretty solid too, because it's doubtful that CD would populate without a user_dim, but I believe I've seen questions here that indicate people can start with a small number of the total tables. I'd expect it correlates to what's being used in the instance.
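The table-name check described above can be sketched in a few lines of Python. This assumes the table name for each file in the dump is already available as a list of strings; the function name is made up for illustration.

```python
def is_requests_only(table_names):
    """True when the dump's distinct table names reduce to just 'requests'."""
    return set(table_names) == {"requests"}


print(is_requests_only(["requests", "requests", "requests"]))  # True
print(is_requests_only(["user_dim", "course_dim", "requests"]))  # False
```

Unlike a file count, this does not change when new tables are released.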

I think a decent solution which satisfies the request would be adding a boolean to the dump data from the API. I would like this too. This would give us enough information to either skip the download or skip and check back for the daily, or check if the previous dump was the daily.

    {
      "accountId": "26~123456",
      "expires": 1559702797680,
      "updatedAt": "2019-04-06T02:46:41.534Z",
      "sequence": 1259,
      "schemaVersion": "4.2.3",
      "numFiles": 104,
      "createdAt": "2019-04-06T02:46:37.680Z",
      "finished": true,
      // "requestsHistorical": true,
      "dumpId": "asdf-asdf-asdf-asdf-asdf"
    }, {
      "accountId": "26~123456",
      "expires": 1559687662093,
      "updatedAt": "2019-04-05T22:34:25.274Z",
      "sequence": 1258,
      "schemaVersion": "4.2.3",
      "numFiles": 100,
      "createdAt": "2019-04-05T22:34:22.093Z",
      "finished": true,
      // "requestsHistorical": false,
      "dumpId": "1234-1234-1234-1234-1234"
    }
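If a boolean like the commented-out `requestsHistorical` were added, a client could decide what to do before downloading anything. The field is only a proposal in this thread and does not exist in the API, so the following Python sketch is purely hypothetical. It treats a missing flag as "unknown" rather than guessing.

```python
def choose_action(dump):
    """Decide what to do with a dump using the proposed (hypothetical) flag."""
    if not dump.get("finished", False):
        return "wait"  # dump is still being produced
    flag = dump.get("requestsHistorical")  # hypothetical field, not in the real API
    if flag is None:
        return "inspect"  # flag absent: fall back to another check
    return "skip" if flag else "download"


print(choose_action({"sequence": 1259, "finished": True, "requestsHistorical": True}))
print(choose_action({"sequence": 1258, "finished": True, "requestsHistorical": False}))
```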


06-11-2019

10:00 PM

Hi James,

I see what you mean. I stop the sync before the file download so I don't see what files it includes. The manual response is to sync the files then use list/grab to get the data for the day the historical dump is published, then set up to run as usual from the following day onward.

Regards,

Stuart.


06-12-2019

06:37 AM

James is correct. Our regular, daily data files have a count of 93. Historical has 117. At the time I wrote my code, there were about 75-80 files in each and it was very hard to determine which was historical simply by looking at the number of files. user_dim is always going to exist in a daily data dump but never in a historical requests dump.

My script to check for new data files goes through this process:

- Use Canvas Data API to check to see if the most current dump_id is the next # in the sequence.

- If it is new, check the artifactsbytable to see if user_dim exists

- If user_dim exists, I know it's a regular daily data dump and I should download it.
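The steps above can be sketched in Python as a single decision function. It assumes the latest dump's metadata has already been retrieved and parsed into a dictionary with a `sequence` number and an `artifactsByTable` mapping keyed by table name. The function name, the `last_sequence` bookkeeping, and the sample data are illustrative assumptions, not Joni's actual script.

```python
def should_download(latest_dump, last_sequence):
    """Apply the checks described above to the latest dump's metadata."""
    # Step 1: is this the next dump in the sequence?
    if latest_dump["sequence"] != last_sequence + 1:
        return False
    # Step 2: look at the per-table listing (assumed to be keyed by table name).
    tables = latest_dump.get("artifactsByTable", {})
    # Step 3: user_dim present means a regular daily dump worth downloading.
    return "user_dim" in tables


# Illustrative metadata only.
daily = {"sequence": 1259, "artifactsByTable": {"user_dim": {}, "requests": {}}}
historical = {"sequence": 1259, "artifactsByTable": {"requests": {}}}

print(should_download(daily, last_sequence=1258))
print(should_download(historical, last_sequence=1258))
```

A script that might miss a day could compare with `>` instead of requiring exactly the next sequence number; the strict comparison here simply follows the description.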

My concern was that having incremental updates for all tables would mean that I would be unable to determine historical vs. not using this method, but Gayatri says there will be an easier way of determining this.

Joni


06-13-2019

05:43 PM

Hi Joni,

It sounds as though your requests historical dumps may be different from ours. Initially the dumps included a full history, so the only way to use it was to completely reload requests data. Some time ago, not sure exactly when, the dumps only included requests data since the last historical dump. This is presumably why the file count is relatively low and relatively stable.

Regards,

Stuart.


06-24-2019

09:47 AM

Stuart,

If you grab a specific data dump by dump_id, it will grab only the files for that dump, including the incremental requests for that dump's time period.

The problem is that on days when there is a historical requests dump (every two months), I was unable to tell which was the historical requests dump and which was our regular data dump. This is still the case, which is why I check whether user_dim exists to verify that it's not requests. I do not have the disk space to download requests, especially not a historical data dump.

We have been on Canvas since November 2014, we are not a small college, and we have a lot of Canvas usage, so there is a ton of data.

If you don't want to reload your requests data, ignore the historical data dump and just use the incremental requests from the non-historical data dumps.

Hope that helps.

Joni


07-02-2019

11:44 PM

Hi Joni,

Thanks for the response. I do use the list / grab functionality when necessary, (e.g. following an historical requests dump), but the drawback of using it routinely is that it drops all of the gzip files into a single directory. In order to use the unpack operation you would then need to distribute the gzip files into their table directories.
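Distributing the grabbed files into per-table directories could be scripted along these lines. This Python sketch assumes each grabbed file name contains its table name and that the list of table names is known in advance. The exact naming scheme used by grab is not shown in this thread, so the file names, function names, and directory layout here are all illustrative assumptions.

```python
import shutil
from pathlib import Path


def table_for(filename, known_tables):
    """Pick the longest known table name that appears in the file name, so
    that quiz_submission_dim is not mistaken for submission_dim."""
    matches = [t for t in known_tables if t in filename]
    return max(matches, key=len) if matches else None


def distribute(flat_dir, target_dir, known_tables):
    """Move each .gz file in flat_dir into target_dir/<table>/."""
    moved = {}
    for path in sorted(Path(flat_dir).glob("*.gz")):
        table = table_for(path.name, known_tables)
        if table is None:
            continue  # leave unrecognised files where they are
        dest = Path(target_dir) / table
        dest.mkdir(parents=True, exist_ok=True)
        shutil.move(str(path), str(dest / path.name))
        moved.setdefault(table, []).append(path.name)
    return moved
```

Whether the unpack operation accepts the prefixed file names once they are in the table directories is not something this sketch verifies.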

It would be much simpler if the requests*.gz files downloaded by the sync were named the same way as they are by the grab. The files downloaded by a grab operation have a prefix which identifies the dump they come from.

Regards,

Stuart.


06-13-2019

09:06 AM

I posted a suggestion to Ideas to sort the data tables before export. Unlike differential imports (which are also valuable), sorting the data before splitting it into million-row chunks would require very little effort, for a moderate increase in the ease of use of the data portal.


06-24-2019

09:40 AM

Yes, I sort by the id field before inserting into the database. It speeds up the inserts immensely.
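A client-side version of this sort might look like the following Python sketch. It assumes a tab-delimited gzip file with no header row and an integer id in the first column; the function name and the column position are assumptions, and a table split across several files would need its rows combined before sorting.

```python
import gzip


def sorted_rows(gz_path, id_column=0):
    """Read a tab-delimited gzip file and return its rows sorted by id."""
    with gzip.open(gz_path, "rt", encoding="utf-8") as handle:
        rows = [line.rstrip("\n").split("\t") for line in handle if line.strip()]
    # Sort numerically so that "10" comes after "9".
    return sorted(rows, key=lambda row: int(row[id_column]))
```

This loads the whole file into memory, which is fine for a single chunk but may not suit very large tables.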


07-04-2019

05:01 PM

I'd like to see better data for reporting external tool utilization from Canvas Data. We need to be able to report on how many courses are using various LTI tools. The external_tool_activation_dim is useful for reporting on tools activated in individual courses. But, when a tool is enabled for a (sub)account rather than a course, it's not easy to report on how many courses actually have the tool enabled.

There's an existing idea here: https://community.canvaslms.com/ideas/13839-canvas-data-include-externaltoolid-in-moduleitemdim-tabl...

And there's a workaround to get the data via the API here: Using Canvas API to find external tool usage


07-18-2019

03:11 PM

Thank you, Craig. I have made a note of this.


08-02-2019

11:38 AM

I've previously mentioned the need for access to the rubric line scores so these can be aggregated for academic assessment & accreditation purposes. In many cases the aggregated scores of individual rubric criteria are useful for this reporting, while the full submission_fact.score is not.

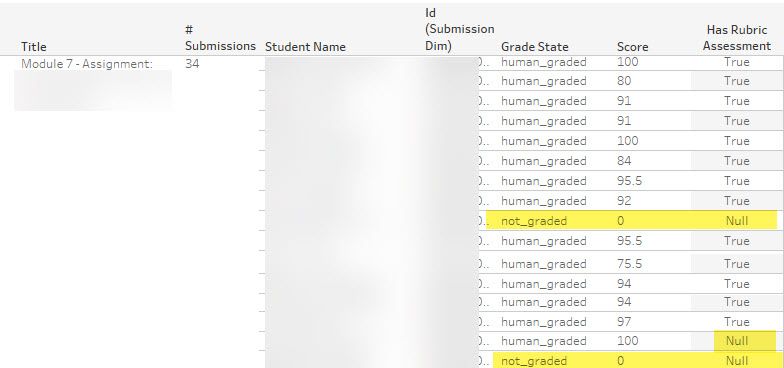

I also need clarification on a few submission_dim fields. To explain, for the majority of our courses we have the grade book policy set to automatically enter a zero when the student does not submit by the due date. In that case, the submission_dim.grade_state field shows not_graded. But the submission was graded automatically, as it received a zero due to the grade book settings. So should this be auto_graded instead? To me, not_graded means there is no grade assigned, 100, 0 or otherwise.

In addition, if the instructor does enter the grade but does not use the rubric associated with the assignment, the submission_dim.has_rubric_assessment field shows as Null when I believe it should be False. That is, if the assignment has an associated rubric and the instructor does use it, it should be True; when the instructor does not use the associated rubric, it should be False; and when no rubric is associated with the assignment, Null should show. For reference: https://portal.inshosteddata.com/docs#submission_dim
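The three-state behaviour requested above can be written down as a small Python function. This expresses the proposed semantics only, not what Canvas Data currently returns, and the function and parameter names are made up for illustration.

```python
from typing import Optional


def expected_has_rubric_assessment(assignment_has_rubric: bool,
                                   rubric_used: bool) -> Optional[bool]:
    """Proposed tri-state value: None, False, or True."""
    if not assignment_has_rubric:
        return None  # no rubric associated with the assignment
    return rubric_used  # rubric exists: True if used when grading, else False


print(expected_has_rubric_assessment(True, True))
print(expected_has_rubric_assessment(True, False))
print(expected_has_rubric_assessment(False, False))
```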

The desirable end product is a report that I can provide to college leadership that shows a) the high level view of the trends on how rubrics are being used and b) the ability to drill down to see who is and who is not using the rubrics when grading, be it by program, course, or down to the instructor level.

Being able to determine if rubrics are being used is important as the use of the rubrics is required by policy. Also not completing the rubrics causes gaps in the data set which then creates issues such as decreased validity/reliability, additional manual work processes, etc.

The current structure makes this reporting difficult for the reasons above.

---

ps. a view of my Tableau troubleshooting workbook. I removed all the filters to view all the courses for a term and 'False' doesn't appear once in the thousands of submissions for over 350 courses.


08-29-2019

07:15 AM

I don't often reply to my own posts...

Support has informed me the field 'submission_dim.has_rubric_assessment' is being eclipsed. I wonder what can be created to replace it.


10-09-2019

11:38 AM

The quiz_submission_dim has a due_at field that integrates the override data into the record so we can see how long it took a teacher to grade the submission.

We would love to have that same field on the submission_dim as well. Seems like a quick add that would be very useful in building dashboards for teachers.

In general we are looking to answer the question: "How long does it take me to grade assignments?"
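With a due_at value available on each submission, the turnaround could be computed roughly as in this Python sketch. It assumes both timestamps are strings that `datetime.fromisoformat` can parse and that the grading time is available as `graded_at`. The function name and the sample values are illustrative, and a due_at field on submission_dim is the request being made here, not an existing field.

```python
from datetime import datetime


def grading_turnaround(due_at: str, graded_at: str):
    """Return the time between the due date and the grade as a timedelta."""
    return datetime.fromisoformat(graded_at) - datetime.fromisoformat(due_at)


# Illustrative values only.
delta = grading_turnaround("2019-10-01 23:59:00", "2019-10-04 08:30:00")
print(delta)
```

A negative result would mean the submission was graded before the due date, which a dashboard might want to treat as zero.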

See the reference thread: https://community.canvaslms.com/ideas/15149-canvasdata-add-dueat-field-to-submissions


This discussion post is outdated and has been archived. Please use the Community question forums and official documentation for the most current and accurate information.