Exporting Live Events to a SQL Database with Fluentd (WIP, Contributions and Testers Needed)


Inspired by @robotcars's solution for exporting Live Events from SQS to SQL (LEDbelly), I'm working on a similar concept.

However, by using Fluentd (open-source), we can eliminate the need for Amazon SQS. The benefits of using Fluentd (What is Fluentd?) are:

- It's FREE and fast

- Flexible and extensible (lots of plug-ins for input/output data; e.g. you can set up an email notification flow based on the data received)

- Self-hosted, so you have total control over the data sent from Canvas

- It can process a large amount of data with minimal system resources (e.g. 30-40 MB of memory to process 13,000 events/second/core)

- Ability to route live data from different Canvas accounts or sub-accounts to different outputs

- and maybe more...

Requirements

- Linux server (or Docker instance) that is accessible via the internet

- SSL certificate (free via Let's Encrypt)

Setting Up

A. Set up Database and Fluentd

1. For ease of setup, migration, and future updates, I aligned the entire database schema with LEDbelly's. You can follow the instructions on LEDbelly's GitHub to set up your database (https://github.com/ccsd/ledbelly/wiki/Getting-Started; you only need to edit the database config file and follow the guide up to step 5)

2. Next, install Fluentd. I use Fluentd-UI to set up Fluentd because it makes viewing logs and the config easier (alternatively, you can set up Fluentd standalone). Enter the following commands in your terminal:

If you don't have RubyGems on your system, please follow the installation instructions here: https://www.ruby-lang.org/en/documentation/installation/

$ gem install fluentd-ui

$ fluentd-ui setup

$ fluentd-ui start --daemonize

You can now access Fluentd-UI in your web browser at http://your_server_ip:9292/. The default account is username="admin" and password="changeme".

3. Once logged in, click "Install Fluentd". Fluentd and its config file will be located in your Linux user's home directory, at ~/.fluentd-ui/

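4. Install the plug-ins:

# SQL input/output plugin for Fluentd (used here to write events to the database)

$ fluent-gem install fluent-plugin-sql --no-document

$ fluent-gem install pg --no-document # PostgreSQL adapter; install the adapter gem for your database if you use a different one

# Fluentd plugin that rewrites an event's tag (rewrite_tag_filter)

$ fluent-gem install fluent-plugin-rewrite-tag-filter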

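5. Open the fluentd-ui folder and pull the config files from my repo:

$ cd ~/.fluentd-ui

$ git clone https://github.com/jerryngm/fluentd-canvas-live-events-to-sql .

(Please note the period "." at the end of the git clone command; it pulls the files into the base of the fluentd-ui folder. git clone only accepts "." when the folder is empty, and this folder already contains the default fluent.conf, so if the clone is refused, run "git init", "git remote add origin https://github.com/jerryngm/fluentd-canvas-live-events-to-sql" and "git pull origin main" instead.)

Then remove the default fluent.conf file:

$ rm fluent.conf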

6. Edit fluent.conf.example and save it as fluent.conf.

Look for the '#your_config_here' marker and the tag next to it, and change the settings accordingly:

- #http_port: the endpoint port that receives JSON live data from Canvas (if your server has a firewall, you will need to open this port)

- #ssl_cert: the path to your domain's SSL certificate (the certificate can be self-signed or obtained for free from Let's Encrypt; see the How-To)

- #database_config: your database connection settings

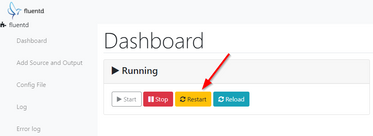

7. Open Fluentd-UI again and press "Restart"
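To double-check the settings you edited in step 6, a small script can list every line of fluent.conf that carries the '#your_config_here' marker, together with the tag next to it. This is a minimal Python sketch, not part of the repo, and it assumes the marker and its tag sit on the same line (for example '#your_config_here #http_port'), which may not match the exact layout of fluent.conf.example.

```python
import re
from pathlib import Path

# Matches the '#your_config_here' marker plus an optional tag right after it,
# e.g. "#your_config_here #http_port". The same-line layout is an assumption.
MARKER_RE = re.compile(r"#your_config_here\s*(#\w+)?")


def flagged_settings(config_text):
    """Return (line_number, tag_or_None, stripped_line) for each marked line."""
    results = []
    for number, line in enumerate(config_text.splitlines(), start=1):
        match = MARKER_RE.search(line)
        if match:
            results.append((number, match.group(1), line.strip()))
    return results


def print_flagged(path="fluent.conf"):
    """Print each marked line of the given config file so it can be reviewed."""
    text = Path(path).expanduser().read_text(encoding="utf-8")
    for number, tag, line in flagged_settings(text):
        print(f"line {number} [{tag or 'no tag'}]: {line}")
```

For example, print_flagged("~/.fluentd-ui/fluent.conf") prints one line per marker, so you can walk through #http_port, #ssl_cert and #database_config before restarting.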

B. Set Up Canvas Data Service

1. Open "Data Services" from your account or sub-account Admin page

2. Click the "+ Add" button

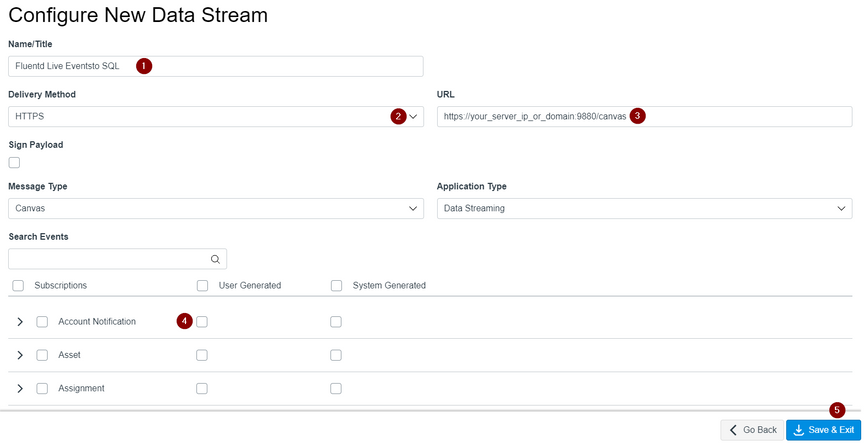

3. Configure it as follows:

- Enter a stream name

- Select "HTTPS"

- Enter the Fluentd endpoint, e.g. https://[your_server_ip]:[fluentd_http_port]/canvas (/canvas is the tag name that I use in my config)

- Select the events you want to subscribe to (as many as you like)

- Click "Save & Exit"
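Before relying on Canvas, you may want to confirm that the endpoint accepts a POST at all. Fluentd's HTTP input takes the URL path as the event tag and accepts JSON bodies sent with Content-Type: application/json, so a hand-built request to /canvas should arrive tagged canvas. The Python sketch below only builds that request. The payload shape (a "metadata" object with an "event_name", plus a "body") is an assumption modelled on Canvas Live Events JSON, the hostname and port are placeholders, and such a minimal body will probably not pass your filters, so treat it as a connectivity check rather than a real event.

```python
import json
import urllib.request


def build_test_request(endpoint, event_name="discussion_topic_created"):
    """Build (but do not send) a POST carrying a minimal Live Events-style payload.

    The payload shape is an assumption; real Canvas events carry many more fields.
    """
    payload = {
        "metadata": {"event_name": event_name},
        "body": {"note": "fluentd connectivity test"},
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical usage (placeholder host and port; this really sends the request):
# request = build_test_request("https://fluentd.example.edu:8888/canvas")
# with urllib.request.urlopen(request, timeout=10) as response:
#     print(response.status)
```

If your certificate is self-signed, urlopen will reject the connection unless you pass it an SSL context that trusts that certificate, e.g. context=ssl.create_default_context(cafile="your_cert.pem").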

Congratulations 🎉, it's now up and running. You can test it by creating an account announcement or a discussion topic.

Open your database and run one of the following queries to see your live events data 😏

select * from live_discussion_topic_created

or

select * from live_account_notification_created
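Both example tables follow the same naming pattern: the prefix live_ followed by the Canvas event name. If you script checks against the database, a tiny helper can build the query from an event name. This is a hypothetical Python sketch that assumes the pattern holds for every table (check it against the LEDbelly schema); it validates the name first, because table names cannot be passed as bound query parameters.

```python
import re

# Event names in the examples are lowercase words joined by underscores.
_EVENT_NAME_RE = re.compile(r"[a-z][a-z0-9_]*")


def live_table_name(event_name):
    """Map an event name to its assumed LEDbelly table name: 'live_' + event name."""
    if not _EVENT_NAME_RE.fullmatch(event_name):
        raise ValueError(f"unexpected event name: {event_name!r}")
    return "live_" + event_name


def select_all_query(event_name):
    """Build the same query as above for any event (identifiers can't be bound)."""
    return f"select * from {live_table_name(event_name)}"
```

The returned string can then be run with whatever database driver you already use for LEDbelly.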

How It Works

Fluentd's documentation is available at https://docs.fluentd.org/

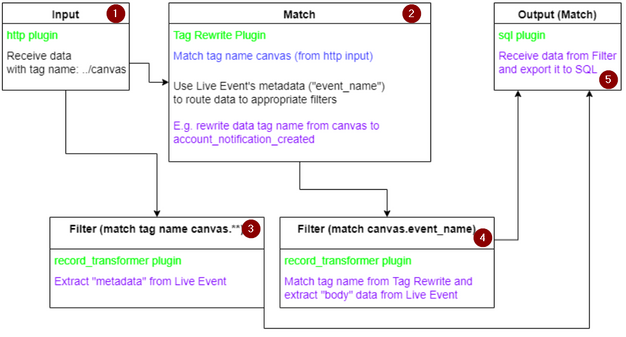

In short, Fluentd consists of three main components:

- Input: in our case, an HTTP endpoint that receives JSON data from Canvas Live Events

- Filter: extracts or manipulates data

- Output (match): once the data is extracted/manipulated, it can be stored (e.g. in a database) or used to trigger an action (e.g. an email notification)

Each component processes data through various plug-ins.
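To make the input, filter, output flow concrete, here is a toy Python model of how Fluentd routes an event once the input has assigned its tag. It is only a sketch of the semantics, not Fluentd's implementation: every filter whose pattern matches the tag runs in order (returning None drops the event), and the event then goes to the first matching output, the way a match block does. Tag patterns are simplified: * matches exactly one dot-separated part and ** matches zero or more parts; wildcards inside a part are not handled.

```python
def tag_matches(pattern, tag):
    """Simplified Fluentd-style tag match: '*' = one part, '**' = zero or more parts."""

    def match(p, t):
        if not p:
            return not t
        if p[0] == "**":
            # '**' may swallow any number of remaining tag parts, including none.
            return any(match(p[1:], t[i:]) for i in range(len(t) + 1))
        if not t:
            return False
        return (p[0] == "*" or p[0] == t[0]) and match(p[1:], t[1:])

    return match(pattern.split("."), tag.split("."))


def run_pipeline(events, filters, outputs):
    """Route (tag, record) pairs through filters, then the first matching output.

    filters and outputs are lists of (pattern, function) pairs. A filter returns
    the (possibly modified) record, or None to drop the event.
    """
    for tag, record in events:
        for pattern, apply_filter in filters:
            if tag_matches(pattern, tag):
                record = apply_filter(tag, record)
                if record is None:
                    break
        if record is None:
            continue  # dropped by a filter
        for pattern, output in outputs:
            if tag_matches(pattern, tag):
                output(tag, record)
                break  # like a match block, only the first matching output receives it
```

Ordering matters in the same way it does in fluent.conf: a catch-all ** output placed first would swallow every event before the more specific outputs are reached.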

Fluentd Config File

Settings for inputs, filters, outputs, and their plug-ins are all configured in a single fluent.conf file (or in multiple .conf files by using '@include con.conf' in the main config file).

Each event received by Fluentd is assigned a tag. In our case, the tag comes from the path of Fluentd's HTTP endpoint: events posted to /canvas are tagged canvas.

Events are then routed through the config file using that tag.
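The rewrite-tag-filter plug-in installed in step 4 is what lets a single canvas tag fan out into per-event tags: it reads a field from each record, tests it against a regular expression, and re-emits the event under a new tag, discarding it if no rule matches. The Python sketch below mimics that behaviour for illustration only. The rule (read metadata.event_name and prefix it with canvas.) is a hypothetical example rather than the repo's actual rule file, and Python's \1 group syntax stands in for the plug-in's $1 placeholders.

```python
import re

# Hypothetical rule: (path to a field, regex, new-tag template using \1 groups).
RULES = [
    (("metadata", "event_name"), r"^(\w+)$", r"canvas.\1"),
]


def lookup(record, path):
    """Follow nested dict keys; return None if any key is missing."""
    value = record
    for key in path:
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value


def rewrite_tag(record, rules=RULES):
    """Return the new tag from the first matching rule, or None to drop the event."""
    for path, pattern, template in rules:
        value = lookup(record, path)
        if value is None:
            continue
        match = re.search(pattern, str(value))
        if match:
            return match.expand(template)
    return None
```

Because the re-emitted event is routed through the config again, the new tag must not match the match pattern that holds the rewrite rules; otherwise the event would loop back into the same rule.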

Visualisation of our config flow

- Located in the main config file: https://github.com/jerryngm/fluentd-canvas-live-events-to-sql/blob/main/fluent.conf.example

- Located here: https://github.com/jerryngm/fluentd-canvas-live-events-to-sql/blob/main/config/canvas_tagrewriterule...

- Located here: https://github.com/jerryngm/fluentd-canvas-live-events-to-sql/blob/main/config/filter/metadata/canva...

- Located in "filter" folder: https://github.com/jerryngm/fluentd-canvas-live-events-to-sql/tree/main/config/filter

- Located in "output" folder: https://github.com/jerryngm/fluentd-canvas-live-events-to-sql/tree/main/config/output

To-do list

There is still a lot of work to be done on this project. As you can see, I only have the filter and output config files for two (2) event types. The remaining work is as follows:

- ✔️ Filter config files to extract data for each live event (template here.....) (LEDbelly body data for each event)

- ✔️ Output config files to store data in each table (template here......) (LEDbelly schema for each table)

- 🆕 Test config files

- 🆕 Batch script to set up Fluentd automatically

- Filter and config files for the Caliper message type

- README file and wiki for our repo

- @robotcars, it would be great if we could write a script or GitHub Action to update the Live Events schema in both of our repos whenever yours is updated

- A new catchy name??

Please contact me if you'd like to maintain or contribute to this project. Thank you 😎
